Code

import pandas as pd

import numpy as np

import pyrsm as rsm

from sklearn.model_selection import GridSearchCV

# setup pyrsm for autoreload

%reload_ext autoreload

%autoreload 2

%aimport pyrsmDuyen Tran

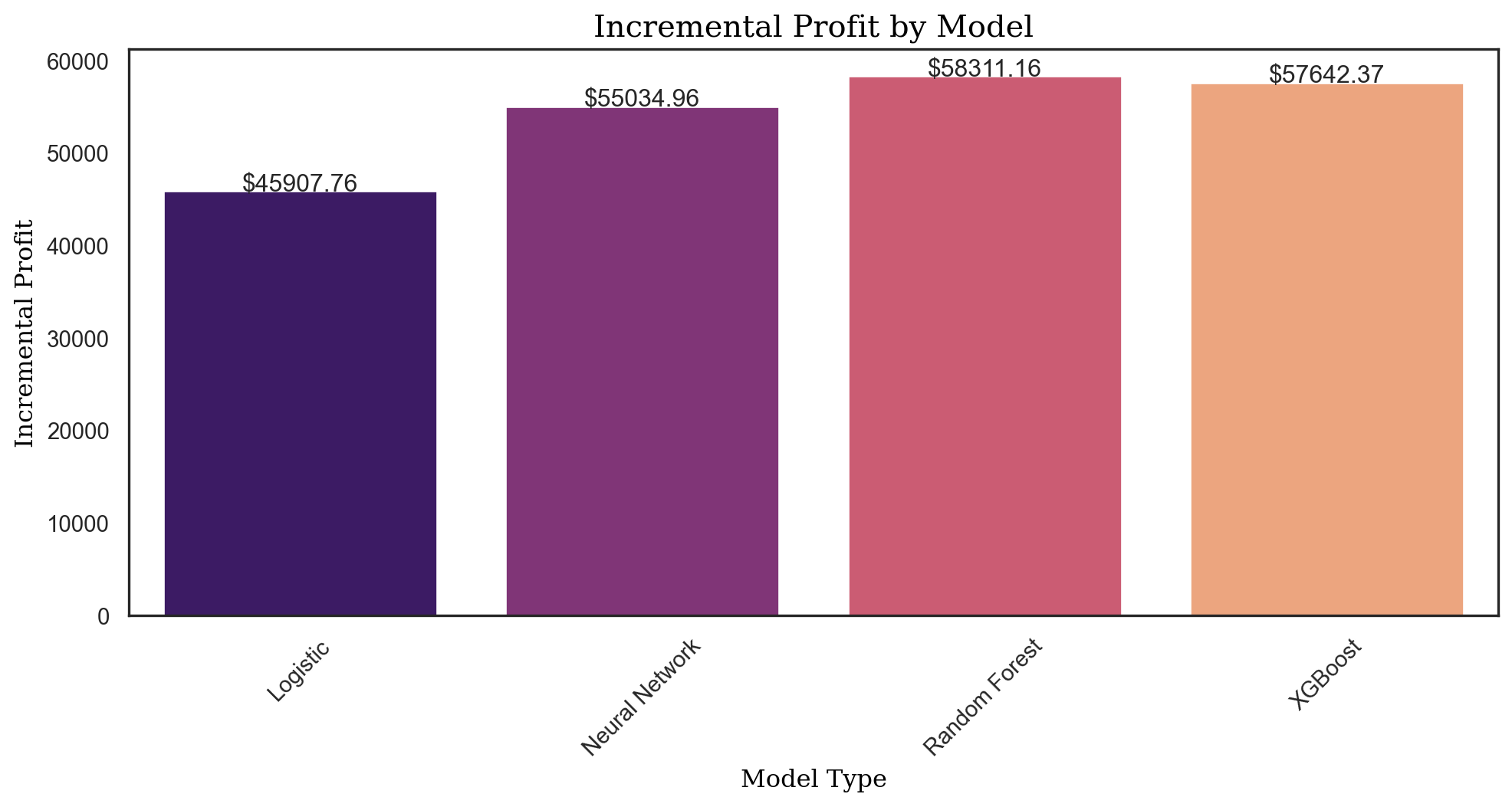

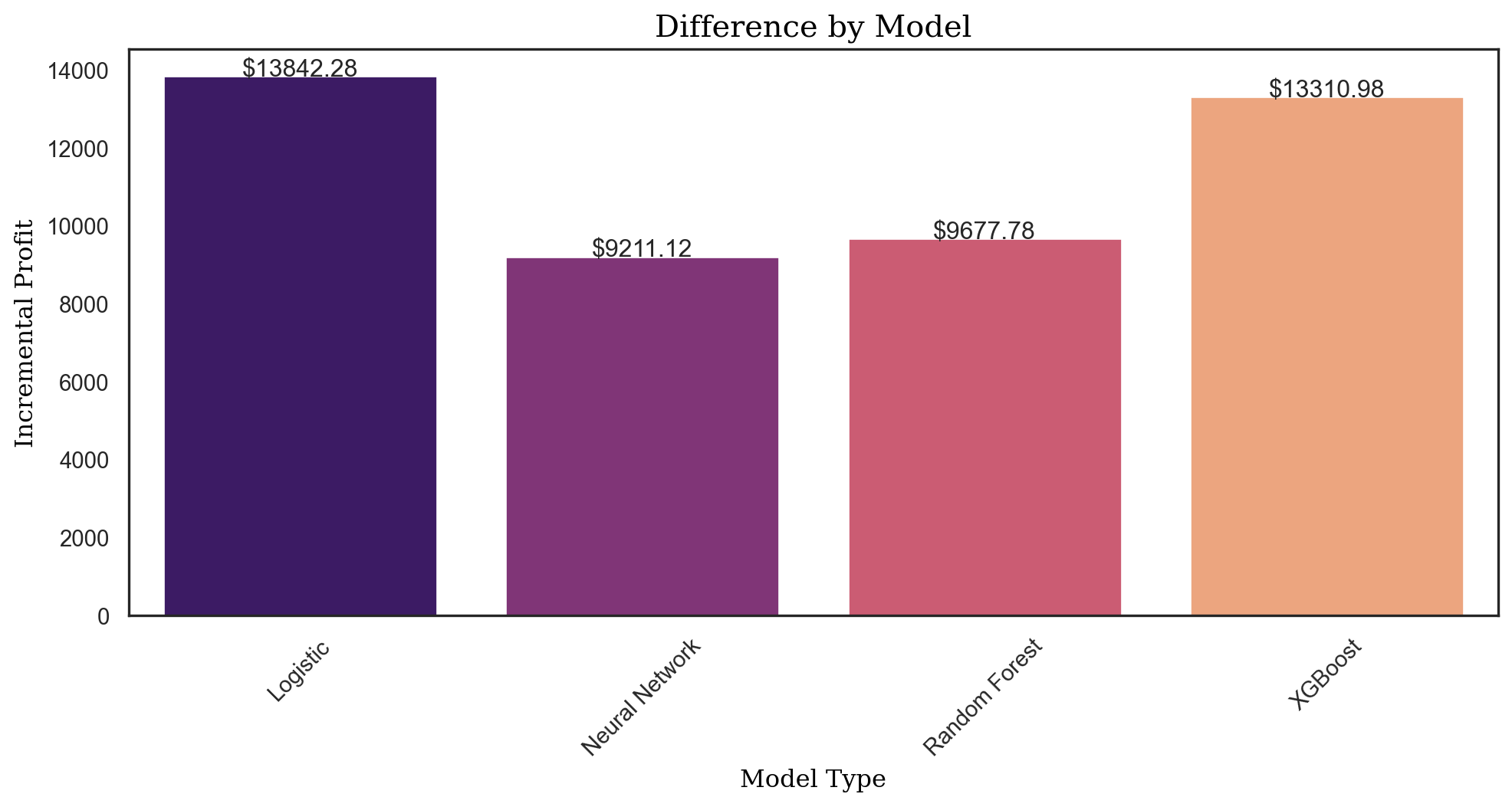

This project will provide insights into the relative strengths and weaknesses of each modeling approach in the context of direct marketing, with a particular focus on maximizing the return on investment for marketing campaigns.

Executing a comprehensive analysis of predictive modeling techniques to refine customer targeting strategies. The techniques under comparison include logistic regression, neural networks, random forests, and XGBoost.

Implementing uplift modeling to evaluate the additional impact of targeting specific customers with marketing efforts.

Employing propensity scoring methods to estimate the probability of customer responses based on historical interaction data.

Main objective: Identify the modeling technique that most effectively pinpoints the top 30,000 customers from a pool of 120,000 who would yield the highest profit when targeted.

Uplift modeling is pivotal in understanding the causal effect of the marketing action, whereas the propensity score approach focuses on the predicted likelihood of customer behavior.

Post-identification of the top-performing model, an additional objective is to analyze and establish the most profitable segment size for targeting purposes, potentially adjusting the initial 30,000 target figure to optimize outreach and profitability.

The results from this study aim to guide marketing strategies, ensuring resource allocation is both efficient and effective.

The findings are expected to serve as a decision-making framework for marketing leaders in optimizing campaign strategies and improving customer engagement and retention.

| converted | GameLevel | NumGameDays | NumGameDays4Plus | NumInGameMessagesSent | NumSpaceHeroBadges | NumFriendRequestIgnored | NumFriends | AcquiredSpaceship | AcquiredIonWeapon | TimesLostSpaceship | TimesKilled | TimesCaptain | TimesNavigator | PurchasedCoinPackSmall | PurchasedCoinPackLarge | NumAdsClicked | DaysUser | UserConsole | UserHasOldOS | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | no | 7 | 18 | 0 | 124 | 0 | 81 | 0 | yes | no | 8 | 0 | 0 | 4 | no | yes | 3 | 2101 | no | no |

| 1 | no | 10 | 3 | 2 | 60 | 0 | 18 | 479 | no | no | 10 | 7 | 0 | 0 | yes | no | 7 | 1644 | yes | no |

| 2 | no | 2 | 1 | 0 | 0 | 0 | 0 | 0 | no | no | 0 | 0 | 0 | 2 | no | no | 8 | 3197 | yes | yes |

| 3 | no | 2 | 11 | 1 | 125 | 0 | 73 | 217 | no | no | 0 | 0 | 0 | 0 | yes | no | 6 | 913 | no | no |

| 4 | no | 8 | 15 | 0 | 0 | 0 | 6 | 51 | yes | no | 0 | 0 | 2 | 1 | yes | no | 21 | 2009 | yes | no |

| converted | GameLevel | NumGameDays | NumGameDays4Plus | NumInGameMessagesSent | NumSpaceHeroBadges | NumFriendRequestIgnored | NumFriends | AcquiredSpaceship | AcquiredIonWeapon | ... | TimesKilled | TimesCaptain | TimesNavigator | PurchasedCoinPackSmall | PurchasedCoinPackLarge | NumAdsClicked | DaysUser | UserConsole | UserHasOldOS | rnd_30k | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | no | 6 | 16 | 0 | 0 | 0 | 0 | 0 | yes | no | ... | 0 | 0 | 0 | no | no | 11 | 1827 | no | no | 0 |

| 1 | no | 2 | 8 | 0 | 0 | 0 | 5 | 4 | no | no | ... | 0 | 8 | 0 | yes | no | 3 | 1889 | no | yes | 1 |

| 2 | no | 6 | 1 | 0 | 0 | 0 | 0 | 0 | no | no | ... | 0 | 0 | 0 | no | yes | 2 | 1948 | yes | no | 0 |

| 3 | yes | 7 | 16 | 0 | 102 | 1 | 0 | 194 | no | no | ... | 0 | 0 | 0 | yes | yes | 21 | 3409 | yes | yes | 0 |

| 4 | no | 10 | 1 | 1 | 233 | 0 | 23 | 0 | no | no | ... | 0 | 5 | 0 | no | yes | 4 | 2922 | yes | no | 0 |

5 rows × 21 columns

| Variable | Description |

|---|---|

converted |

Purchased the Zalon campain (“yes” or “no”) |

GameLevel |

Highest level of game achieved by the user |

NumGameDays |

Number of days user played the game in last month (with or without network connection) |

NumGameDays4Plus |

Number of days user played the game in last month with 4 or more total users (this implies using a network connection) |

NumInGameMessagesSent |

Number of in-game messages sent to friends |

NumFriends |

Number of friends to which the user is connected (necessary to crew together in multiplayer mode) |

NumFriendRequestIgnored |

Number of friend requests this user has not replied to since game inception |

NumSpaceHeroBadges |

Number of “Space Hero” badges, the highest distinction for gameplay in Space Pirates |

AcquiredSpaceship |

Flag if the user owns a spaceship, i.e., does not have to crew on another user’s or NPC’s space ship (“no” or “yes”) |

AcquiredIonWeapon |

Flag if the user owns the powerful “ion weapon” (“no” or “yes”) |

TimesLostSpaceship |

The number of times the user destroyed his/her spaceship during gameplay. Spaceships need to be re-acquired if destroyed. |

TimesKilled |

Number of times the user was killed during gameplay |

TimesCaptain |

Number of times in last month that the user played in the role of a captain |

TimesNavigator |

Number of times in last month that the user played in the role of a navigator |

PurchasedCoinPackSmall |

Flag if the user purchased a small pack of Zathium in last month (“no” or “yes”) |

PurchasedCoinPackLarge |

Flag if the user purchased a large pack of Zathium in last month (“no” or “yes”) |

NumAdsClicked |

Number of in-app ads the user has clicked on |

DaysUser |

Number of days since user established a user ID with Creative Gaming (for Space Pirates or previous games) |

UserConsole |

Flag if the user plays Creative Gaming games on a console (“no” or “yes”) |

UserHasOldOS |

Flag if the user has iOS version 10 or earlier (“no” or “yes”) |

rnd_30k |

Dummy variable that randomly selects 30K customers (1) and the remaining 90K (0) |

| converted | GameLevel | NumGameDays | NumGameDays4Plus | NumInGameMessagesSent | NumSpaceHeroBadges | NumFriendRequestIgnored | NumFriends | AcquiredSpaceship | AcquiredIonWeapon | TimesLostSpaceship | TimesKilled | TimesCaptain | TimesNavigator | PurchasedCoinPackSmall | PurchasedCoinPackLarge | NumAdsClicked | DaysUser | UserConsole | UserHasOldOS | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | no | 2 | 8 | 0 | 0 | 0 | 5 | 4 | no | no | 0 | 0 | 8 | 0 | yes | no | 3 | 1889 | no | yes |

| 1 | no | 5 | 15 | 0 | 179 | 0 | 50 | 362 | yes | no | 22 | 0 | 4 | 4 | no | no | 2 | 1308 | yes | no |

| 2 | no | 7 | 7 | 0 | 267 | 0 | 64 | 0 | no | no | 5 | 0 | 0 | 0 | no | yes | 1 | 3562 | yes | no |

| 3 | no | 4 | 4 | 0 | 36 | 0 | 0 | 0 | no | no | 0 | 0 | 0 | 0 | no | no | 2 | 2922 | yes | no |

| 4 | no | 8 | 17 | 0 | 222 | 10 | 63 | 20 | yes | no | 10 | 0 | 9 | 6 | yes | no | 4 | 2192 | yes | no |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 29995 | no | 5 | 1 | 0 | 0 | 0 | 0 | 0 | no | no | 0 | 0 | 0 | 0 | yes | yes | 11 | 2374 | no | no |

| 29996 | no | 9 | 12 | 0 | 78 | 0 | 59 | 1 | yes | no | 16 | 0 | 0 | 5 | yes | no | 2 | 1978 | yes | no |

| 29997 | no | 9 | 19 | 1 | 271 | 0 | 71 | 95 | yes | no | 14 | 0 | 0 | 3 | no | no | 2 | 2831 | yes | yes |

| 29998 | no | 10 | 23 | 0 | 76 | 6 | 20 | 107 | no | no | 38 | 0 | 1 | 0 | no | no | 9 | 3197 | yes | no |

| 29999 | no | 6 | 8 | 0 | 115 | 0 | 13 | 0 | yes | no | 5 | 0 | 0 | 4 | no | no | 11 | 2343 | yes | no |

30000 rows × 20 columns

# a. Add "ad" to cg_ad_random and set its value to 1 for all rows

cg_ad_random["ad"] = 1

# b. Add "ad" to cg_organic_control and set its value to 0 for all rows

cg_organic_control["ad"] = 0

# c. Create a stacked dataset by combining cg_ad_random and cg_organic_control

cg_rct_stacked = pd.concat([cg_ad_random, cg_organic_control], axis=0)

cg_rct_stacked['converted_yes']= rsm.ifelse(

cg_rct_stacked.converted == "yes", 1, rsm.ifelse(cg_rct_stacked.converted == "no", 0, np.nan)

)

# d. Create a training variable

cg_rct_stacked['training'] = rsm.model.make_train(

data=cg_rct_stacked, test_size=0.3, strat_var=['converted', 'ad'], random_state = 1234)

# Check the proportions of the training variable

cg_rct_stacked.training.value_counts(normalize=True)training

1.0 0.7

0.0 0.3

Name: proportion, dtype: float64| ad | 0 | 1 | ||

|---|---|---|---|---|

| training | 0.0 | 1.0 | 0.0 | 1.0 |

| converted | ||||

| yes | 512 | 1194 | 1174 | 2739 |

| no | 8488 | 19806 | 7826 | 18261 |

| ad | 0 | 1 | ||

|---|---|---|---|---|

| training | 0.0 | 1.0 | 0.0 | 1.0 |

| converted | ||||

| yes | 0.057 | 0.057 | 0.13 | 0.13 |

| no | 0.943 | 0.943 | 0.87 | 0.87 |

# Assign variables to evar

evar = [

"GameLevel",

"NumGameDays",

"NumGameDays4Plus",

"NumInGameMessagesSent",

"NumFriends",

"NumFriendRequestIgnored",

"NumSpaceHeroBadges",

"AcquiredSpaceship",

"AcquiredIonWeapon",

"TimesLostSpaceship",

"TimesKilled",

"TimesCaptain",

"TimesNavigator",

"PurchasedCoinPackSmall",

"PurchasedCoinPackLarge",

"NumAdsClicked",

"DaysUser",

"UserConsole",

"UserHasOldOS"

]Logistic regression (GLM)

Data : cg_rct_stacked

Response variable : converted

Level : yes

Explanatory variables: GameLevel, NumGameDays, NumGameDays4Plus, NumInGameMessagesSent, NumFriends, NumFriendRequestIgnored, NumSpaceHeroBadges, AcquiredSpaceship, AcquiredIonWeapon, TimesLostSpaceship, TimesKilled, TimesCaptain, TimesNavigator, PurchasedCoinPackSmall, PurchasedCoinPackLarge, NumAdsClicked, DaysUser, UserConsole, UserHasOldOS

Null hyp.: There is no effect of x on converted

Alt. hyp.: There is an effect of x on converted

OR OR% coefficient std.error z.value p.value

Intercept 0.030 -97.0% -3.52 0.122 -28.987 < .001 ***

AcquiredSpaceship[yes] 1.088 8.8% 0.08 0.049 1.732 0.083 .

AcquiredIonWeapon[yes] 0.917 -8.3% -0.09 0.164 -0.533 0.594

PurchasedCoinPackSmall[yes] 1.045 4.5% 0.04 0.046 0.960 0.337

PurchasedCoinPackLarge[yes] 1.211 21.1% 0.19 0.049 3.930 < .001 ***

UserConsole[yes] 0.945 -5.5% -0.06 0.058 -0.979 0.328

UserHasOldOS[yes] 0.799 -20.1% -0.22 0.081 -2.752 0.006 **

GameLevel 1.059 5.9% 0.06 0.009 6.399 < .001 ***

NumGameDays 1.015 1.5% 0.02 0.004 4.264 < .001 ***

NumGameDays4Plus 1.011 1.1% 0.01 0.006 1.674 0.094 .

NumInGameMessagesSent 1.000 0.0% 0.00 0.000 0.205 0.838

NumFriends 1.002 0.2% 0.00 0.000 9.255 < .001 ***

NumFriendRequestIgnored 1.000 -0.0% -0.00 0.001 -0.484 0.628

NumSpaceHeroBadges 1.028 2.8% 0.03 0.009 2.968 0.003 **

TimesLostSpaceship 0.993 -0.7% -0.01 0.002 -2.964 0.003 **

TimesKilled 1.001 0.1% 0.00 0.006 0.201 0.841

TimesCaptain 1.005 0.5% 0.01 0.002 2.054 0.04 *

TimesNavigator 1.001 0.1% 0.00 0.003 0.205 0.838

NumAdsClicked 1.094 9.4% 0.09 0.003 33.156 < .001 ***

DaysUser 1.000 -0.0% -0.00 0.000 -0.469 0.639

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Pseudo R-squared (McFadden): 0.096

Pseudo R-squared (McFadden adjusted): 0.094

Area under the RO Curve (AUC): 0.712

Log-likelihood: -7346.776, AIC: 14733.552, BIC: 14892.598

Chi-squared: 1568.873, df(19), p.value < 0.001

Nr obs: 21,000Logistic regression (GLM)

Data : cg_rct_stacked

Response variable : converted

Level : yes

Explanatory variables: GameLevel, NumGameDays, NumGameDays4Plus, NumInGameMessagesSent, NumFriends, NumFriendRequestIgnored, NumSpaceHeroBadges, AcquiredSpaceship, AcquiredIonWeapon, TimesLostSpaceship, TimesKilled, TimesCaptain, TimesNavigator, PurchasedCoinPackSmall, PurchasedCoinPackLarge, NumAdsClicked, DaysUser, UserConsole, UserHasOldOS

Null hyp.: There is no effect of x on converted

Alt. hyp.: There is an effect of x on converted

OR OR% coefficient std.error z.value p.value

Intercept 0.006 -99.4% -5.18 0.193 -26.809 < .001 ***

AcquiredSpaceship[yes] 1.594 59.4% 0.47 0.072 6.472 < .001 ***

AcquiredIonWeapon[yes] 0.860 -14.0% -0.15 0.267 -0.566 0.571

PurchasedCoinPackSmall[yes] 1.029 2.9% 0.03 0.069 0.415 0.678

PurchasedCoinPackLarge[yes] 1.338 33.8% 0.29 0.074 3.947 < .001 ***

UserConsole[yes] 1.148 14.8% 0.14 0.093 1.490 0.136

UserHasOldOS[yes] 0.832 -16.8% -0.18 0.124 -1.479 0.139

GameLevel 1.114 11.4% 0.11 0.014 7.527 < .001 ***

NumGameDays 1.033 3.3% 0.03 0.005 5.954 < .001 ***

NumGameDays4Plus 1.047 4.7% 0.05 0.008 5.538 < .001 ***

NumInGameMessagesSent 1.001 0.1% 0.00 0.000 3.311 < .001 ***

NumFriends 1.001 0.1% 0.00 0.000 4.809 < .001 ***

NumFriendRequestIgnored 0.989 -1.1% -0.01 0.001 -8.415 < .001 ***

NumSpaceHeroBadges 1.523 52.3% 0.42 0.013 32.587 < .001 ***

TimesLostSpaceship 0.946 -5.4% -0.06 0.006 -9.189 < .001 ***

TimesKilled 1.006 0.6% 0.01 0.005 1.103 0.27

TimesCaptain 0.998 -0.2% -0.00 0.003 -0.487 0.626

TimesNavigator 0.989 -1.1% -0.01 0.005 -2.365 0.018 *

NumAdsClicked 1.031 3.1% 0.03 0.004 8.114 < .001 ***

DaysUser 1.000 0.0% 0.00 0.000 2.335 0.02 *

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Pseudo R-squared (McFadden): 0.202

Pseudo R-squared (McFadden adjusted): 0.198

Area under the RO Curve (AUC): 0.831

Log-likelihood: -3656.883, AIC: 7353.766, BIC: 7512.812

Chi-squared: 1851.927, df(19), p.value < 0.001

Nr obs: 21,000Uplift Tab

| pred | bins | cum_prop | T_resp | T_n | C_resp | C_n | incremental_resp | inc_uplift | uplift | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | uplift_score | 1 | 0.05 | 197 | 450 | 70 | 634 | 147.315457 | 1.636838 | 0.327368 |

| 1 | uplift_score | 2 | 0.10 | 309 | 900 | 99 | 1182 | 233.619289 | 2.595770 | 0.195969 |

| 2 | uplift_score | 3 | 0.15 | 428 | 1350 | 125 | 1686 | 327.911032 | 3.643456 | 0.212857 |

| 3 | uplift_score | 4 | 0.20 | 528 | 1800 | 152 | 2175 | 402.206897 | 4.468966 | 0.167007 |

| 4 | uplift_score | 5 | 0.25 | 594 | 2250 | 166 | 2684 | 454.842027 | 5.053800 | 0.119162 |

| 5 | uplift_score | 6 | 0.30 | 642 | 2700 | 183 | 3150 | 485.142857 | 5.390476 | 0.070186 |

| 6 | uplift_score | 7 | 0.35 | 681 | 3150 | 195 | 3658 | 513.080372 | 5.700893 | 0.063045 |

| 7 | uplift_score | 8 | 0.40 | 719 | 3600 | 200 | 4127 | 544.539133 | 6.050435 | 0.073783 |

| 8 | uplift_score | 9 | 0.45 | 756 | 4050 | 210 | 4577 | 570.179594 | 6.335329 | 0.060000 |

| 9 | uplift_score | 10 | 0.50 | 791 | 4500 | 231 | 5076 | 586.212766 | 6.513475 | 0.035694 |

| 10 | uplift_score | 11 | 0.55 | 831 | 4950 | 249 | 5555 | 609.118812 | 6.767987 | 0.051311 |

| 11 | uplift_score | 12 | 0.60 | 859 | 5400 | 262 | 6031 | 624.412038 | 6.937912 | 0.034911 |

| 12 | uplift_score | 13 | 0.65 | 892 | 5850 | 275 | 6486 | 643.965772 | 7.155175 | 0.044762 |

| 13 | uplift_score | 14 | 0.70 | 937 | 6300 | 288 | 6938 | 675.483713 | 7.505375 | 0.071239 |

| 14 | uplift_score | 15 | 0.75 | 980 | 6750 | 298 | 7384 | 707.586674 | 7.862074 | 0.073134 |

| 15 | uplift_score | 16 | 0.80 | 1014 | 7200 | 312 | 7805 | 726.184497 | 8.068717 | 0.042301 |

| 16 | uplift_score | 17 | 0.85 | 1047 | 7650 | 319 | 8224 | 750.264835 | 8.336276 | 0.056627 |

| 17 | uplift_score | 18 | 0.90 | 1079 | 8100 | 360 | 8621 | 740.756177 | 8.230624 | -0.032163 |

| 18 | uplift_score | 19 | 0.95 | 1134 | 8550 | 432 | 8831 | 715.746122 | 7.952735 | -0.220635 |

| 19 | uplift_score | 20 | 1.00 | 1174 | 9000 | 512 | 9000 | 662.000000 | 7.355556 | -0.384484 |

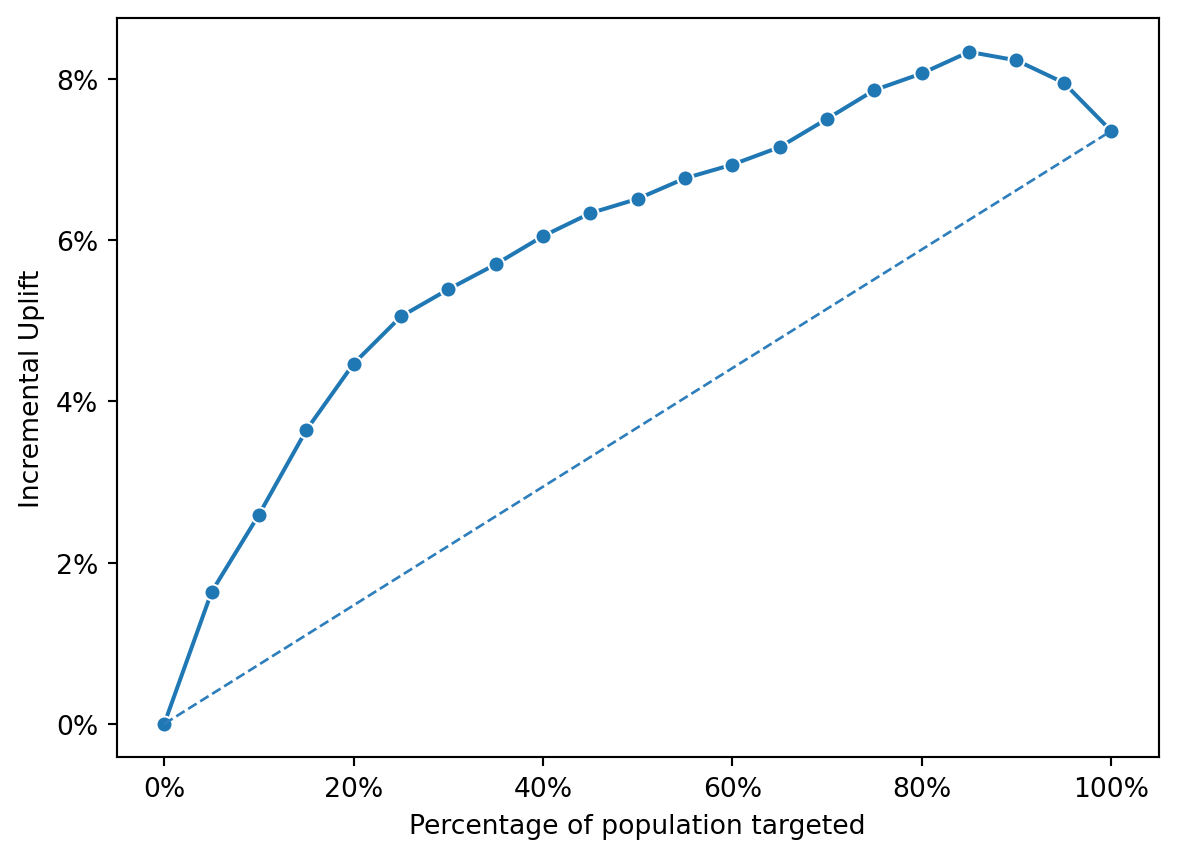

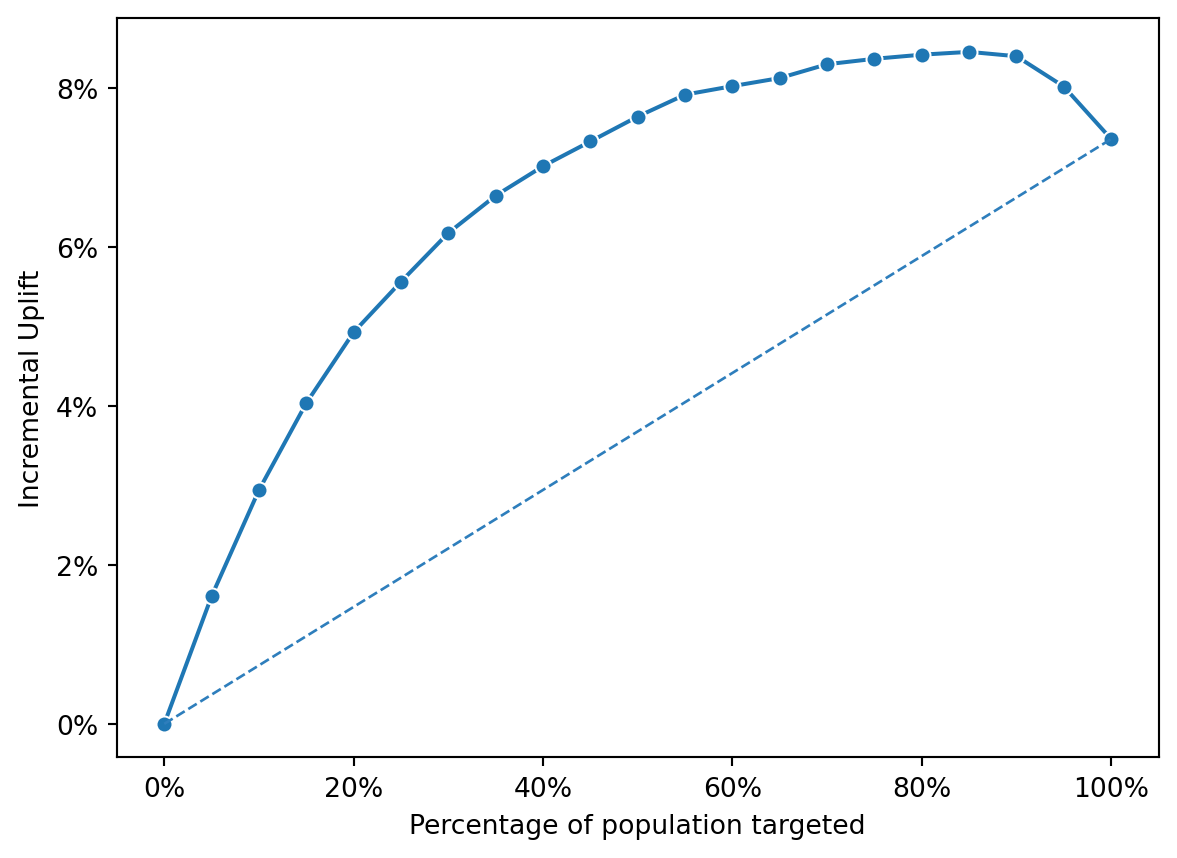

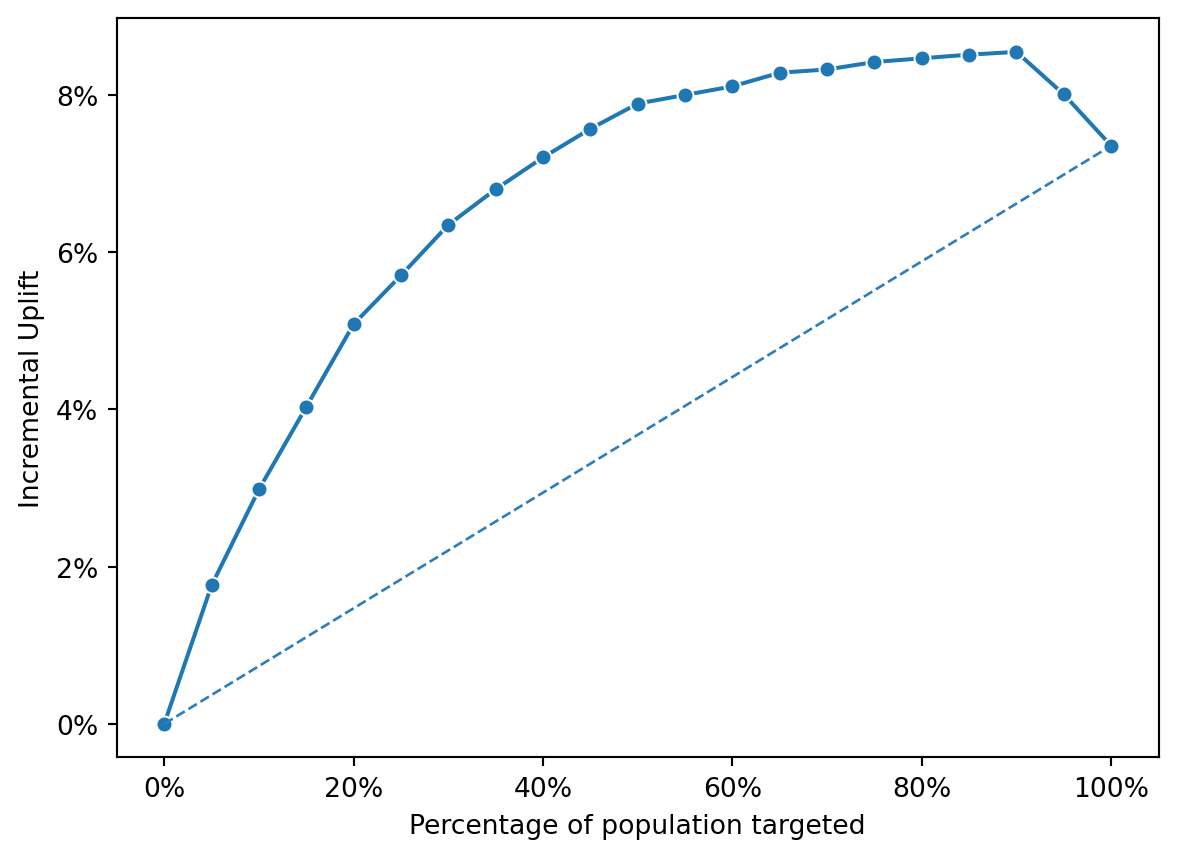

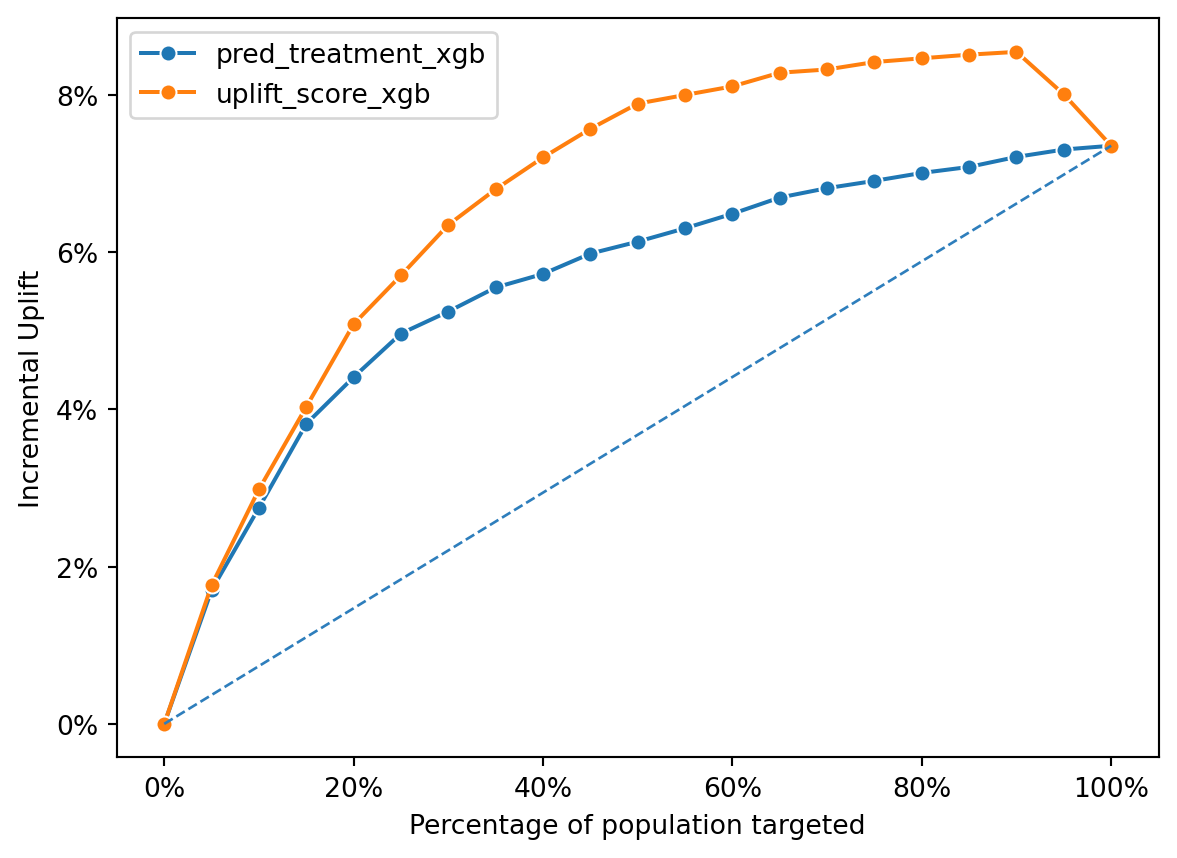

Gain Plot

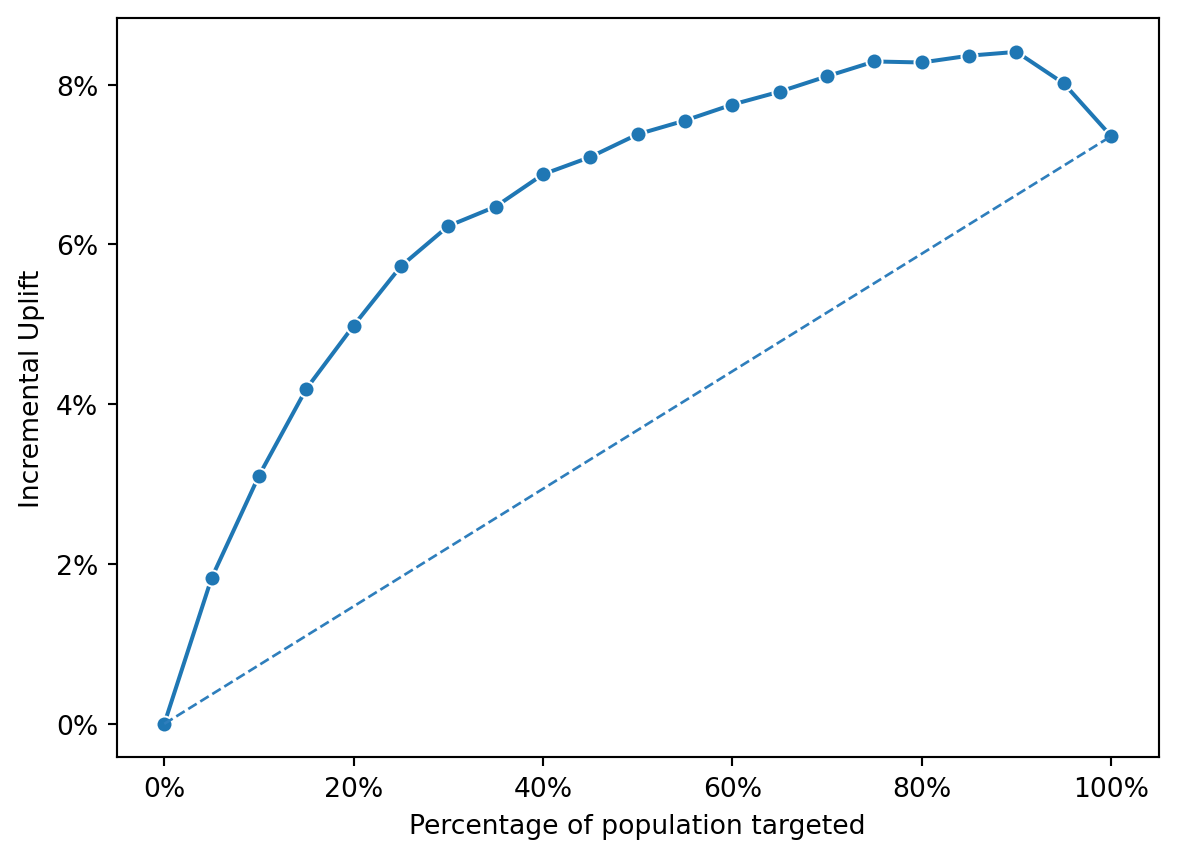

The curve starts at 0% uplift when 0% of the population is targeted (as expected, because no one has been exposed to the campaign).

As the percentage of the targeted population increases, the incremental uplift also increases, suggesting that targeting more of the population is yielding positive results.

The curve rises sharply at first, indicating that initially targeting the most responsive segments of the population yields significant uplift.

After reaching a peak (which seems to be just under 80% of the population targeted), the incremental uplift begins to plateau or decrease slightly, suggesting that beyond this point, targeting additional segments of the population adds less value or could potentially include less responsive or non-responsive individuals.

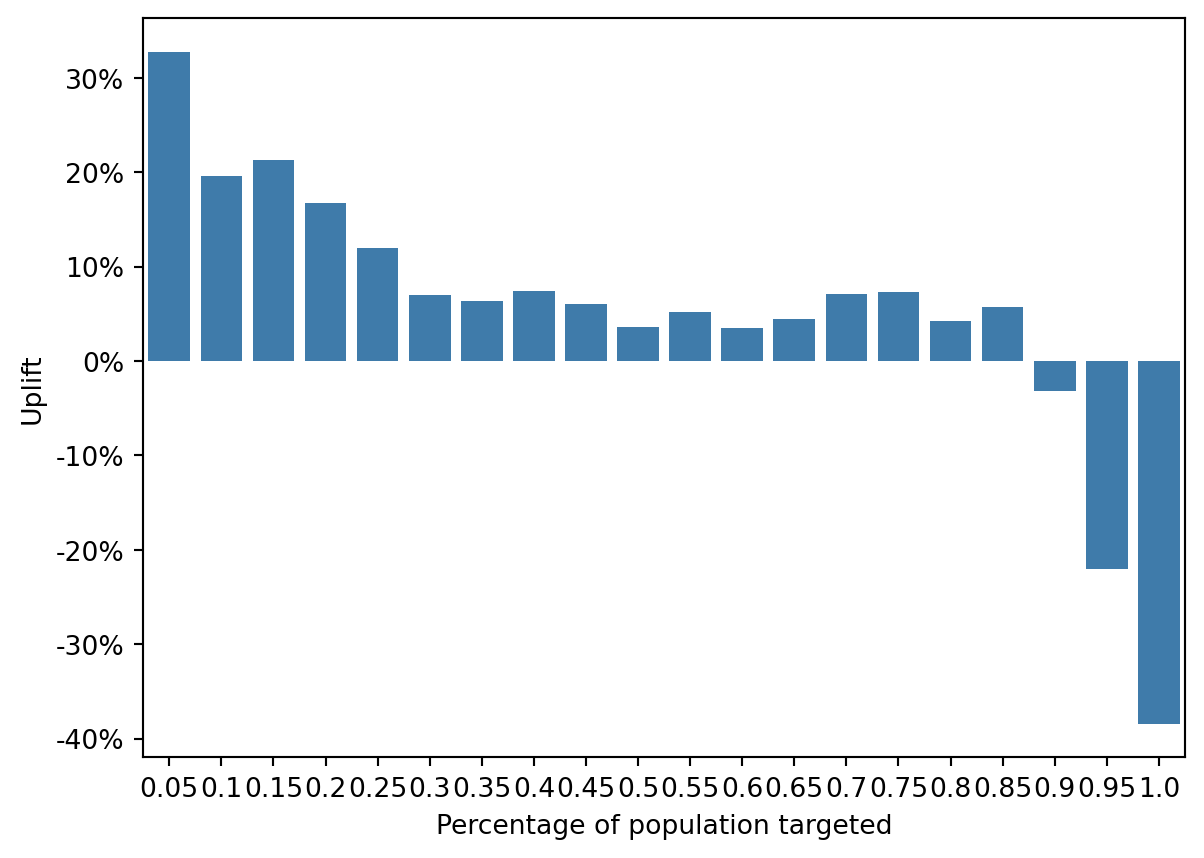

The first decile (the top 10% predicted to be most responsive) shows the highest uplift, above 20%.

The uplift decreases across subsequent deciles, which is consistent with the expectation that the first deciles contain the most responsive individuals.

There is a noticeable decline in uplift as we move to higher deciles. The uplift becomes negative in the last deciles, indicating that targeting these segments would result in worse outcomes than if they were not targeted at all.

Negative uplift in the later deciles could indicate that the campaign has a counterproductive effect on these individuals or that they would have been better or equally likely to take the desired action without the campaign intervention.

pred uplift_score

bins 5

cum_prop 0.25

T_resp 594

T_n 2250

C_resp 166

C_n 2684

incremental_resp 454.842027

inc_uplift 5.0538

uplift 0.119162

Name: 4, dtype: object# Define the function to calculate the profit

def prof_calc(data, price = 14.99, cost = 1.5):

# Given variables

target_customers = 30000

target_prop = 30000 / 120000

# Calculate the scale factor

scale_factor = 120000 / 9000

# Calculate the expected incremental customers and profits

target_row = data[data['cum_prop'] <= target_prop].iloc[-1]

profit = (price*target_row['incremental_resp'] - cost * target_row['T_n']) * scale_factor

return profit| pred | bins | cum_prop | T_resp | T_n | C_resp | C_n | incremental_resp | inc_uplift | uplift | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | pred_treatment | 1 | 0.05 | 204 | 450 | 80 | 603 | 144.298507 | 1.603317 | 0.320663 |

| 1 | pred_treatment | 2 | 0.10 | 326 | 900 | 112 | 1131 | 236.875332 | 2.631948 | 0.210505 |

| 2 | pred_treatment | 3 | 0.15 | 430 | 1350 | 159 | 1605 | 296.261682 | 3.291796 | 0.131955 |

| 3 | pred_treatment | 4 | 0.20 | 525 | 1800 | 206 | 1994 | 339.042126 | 3.767135 | 0.090288 |

| 4 | pred_treatment | 5 | 0.25 | 615 | 2250 | 239 | 2344 | 385.584471 | 4.284272 | 0.105714 |

| 5 | pred_treatment | 6 | 0.30 | 672 | 2700 | 285 | 2807 | 397.863912 | 4.420710 | 0.027315 |

| 6 | pred_treatment | 7 | 0.35 | 726 | 3150 | 316 | 3162 | 411.199241 | 4.568880 | 0.032676 |

| 7 | pred_treatment | 8 | 0.40 | 775 | 3600 | 336 | 3603 | 439.279767 | 4.880886 | 0.063537 |

| 8 | pred_treatment | 9 | 0.45 | 813 | 4050 | 361 | 4044 | 451.464392 | 5.016271 | 0.027755 |

| 9 | pred_treatment | 10 | 0.50 | 838 | 4500 | 386 | 4527 | 454.302187 | 5.047802 | 0.003796 |

| 10 | pred_treatment | 11 | 0.55 | 885 | 4950 | 409 | 4991 | 479.359848 | 5.326221 | 0.054875 |

| 11 | pred_treatment | 12 | 0.60 | 916 | 5400 | 436 | 5479 | 486.286549 | 5.403184 | 0.013561 |

| 12 | pred_treatment | 13 | 0.65 | 951 | 5850 | 455 | 5880 | 498.321429 | 5.536905 | 0.030396 |

| 13 | pred_treatment | 14 | 0.70 | 995 | 6300 | 464 | 6332 | 533.344915 | 5.926055 | 0.077866 |

| 14 | pred_treatment | 15 | 0.75 | 1028 | 6750 | 475 | 6793 | 556.006772 | 6.177853 | 0.049472 |

| 15 | pred_treatment | 16 | 0.80 | 1065 | 7200 | 483 | 7256 | 585.727674 | 6.508085 | 0.064944 |

| 16 | pred_treatment | 17 | 0.85 | 1091 | 7650 | 495 | 7649 | 595.935286 | 6.621503 | 0.027243 |

| 17 | pred_treatment | 18 | 0.90 | 1120 | 8100 | 499 | 8093 | 620.568392 | 6.895204 | 0.055435 |

| 18 | pred_treatment | 19 | 0.95 | 1148 | 8550 | 508 | 8571 | 641.244662 | 7.124941 | 0.043394 |

| 19 | pred_treatment | 20 | 1.00 | 1174 | 9000 | 512 | 9000 | 662.000000 | 7.355556 | 0.048454 |

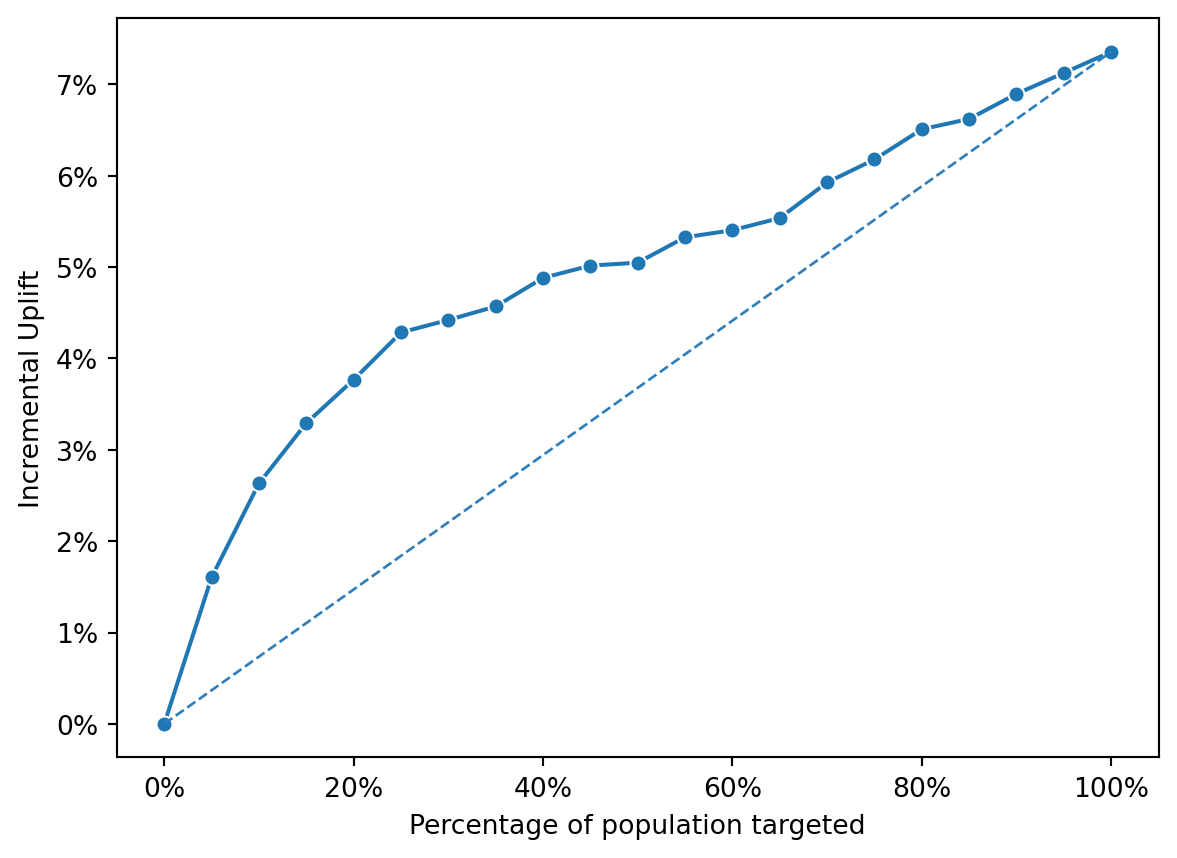

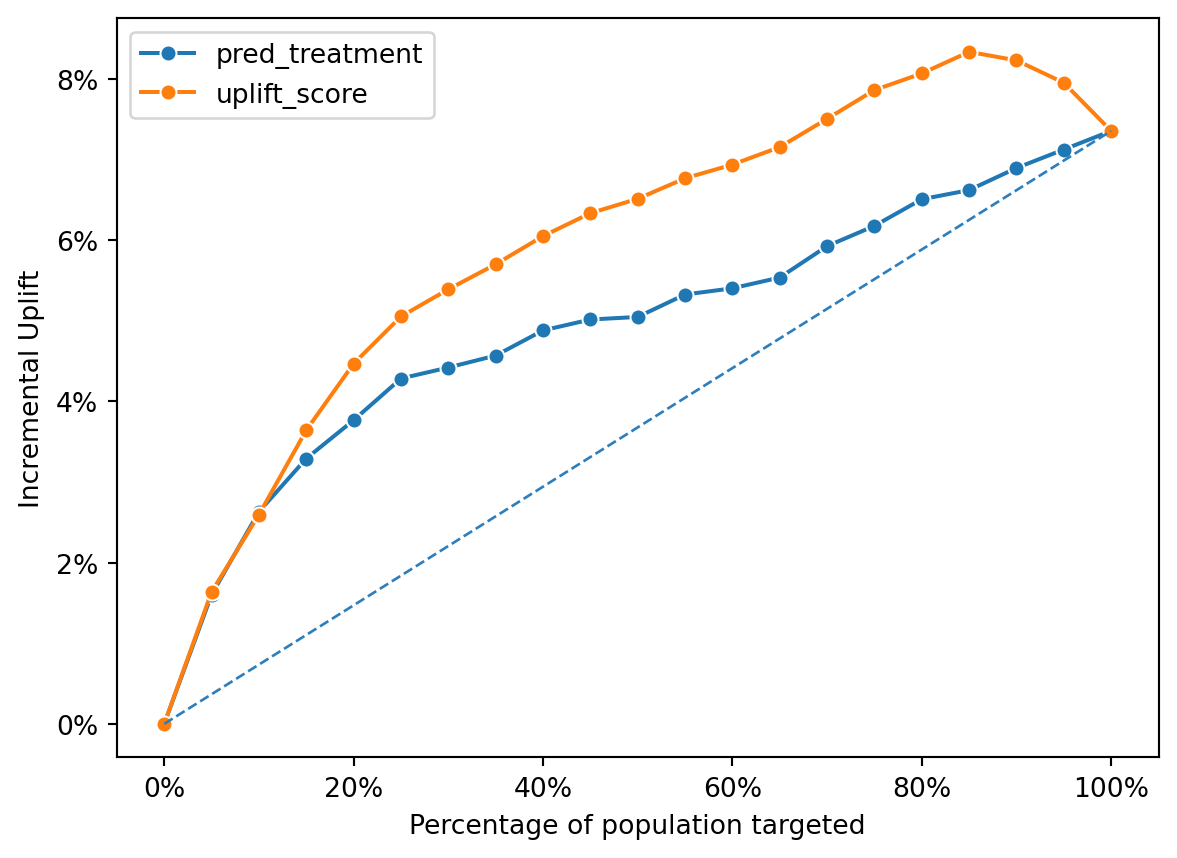

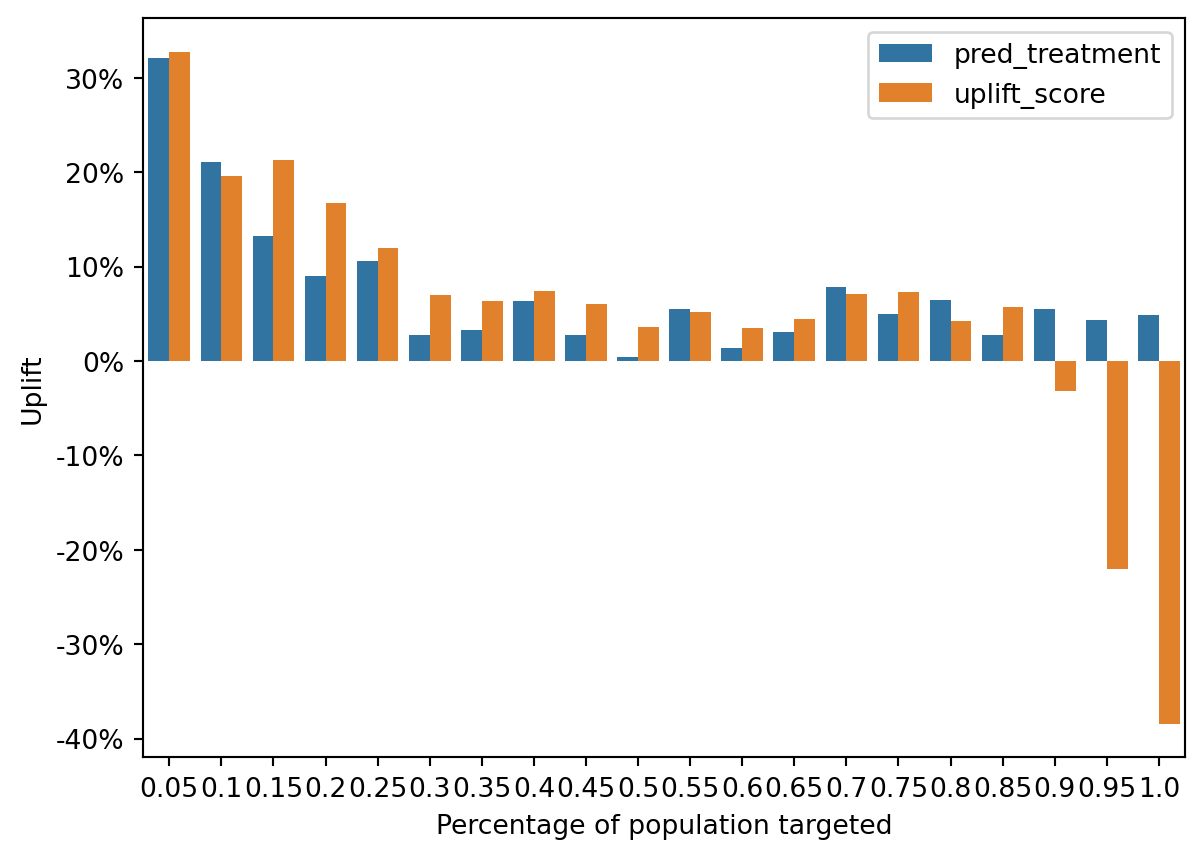

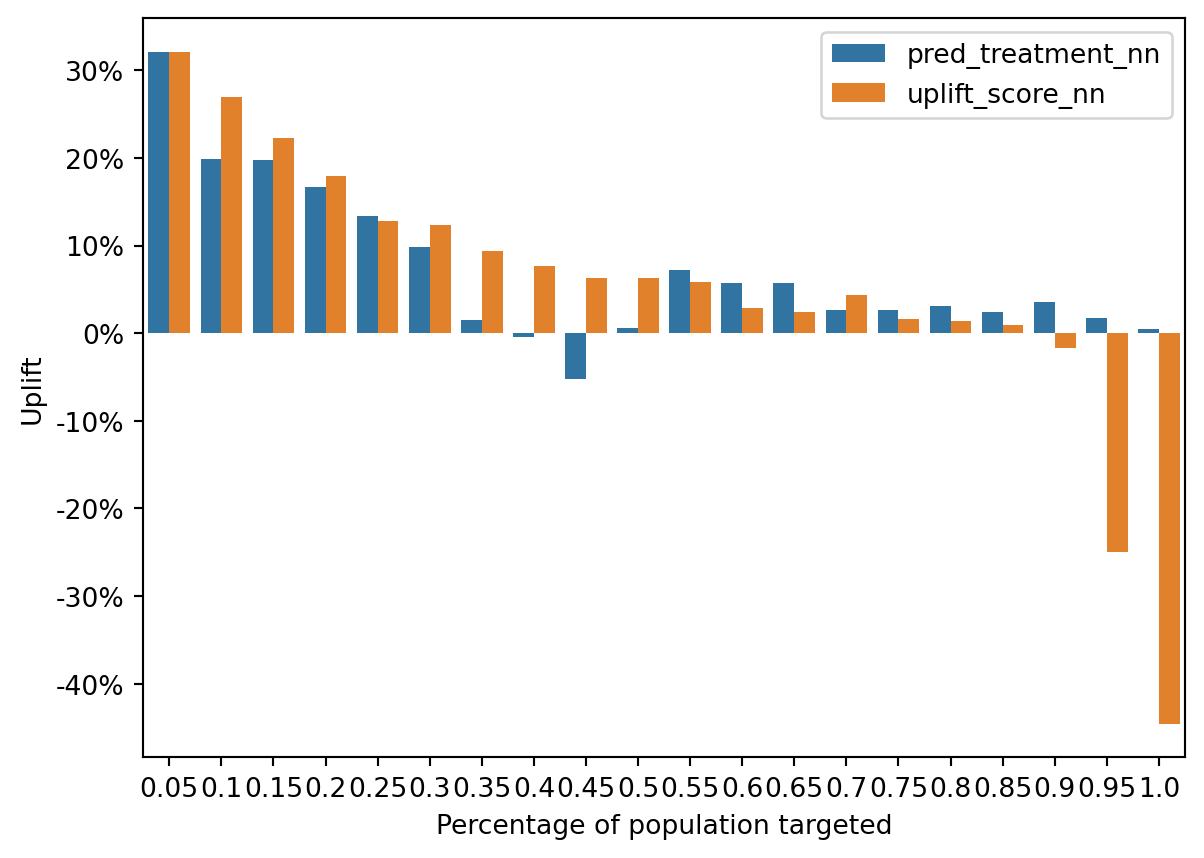

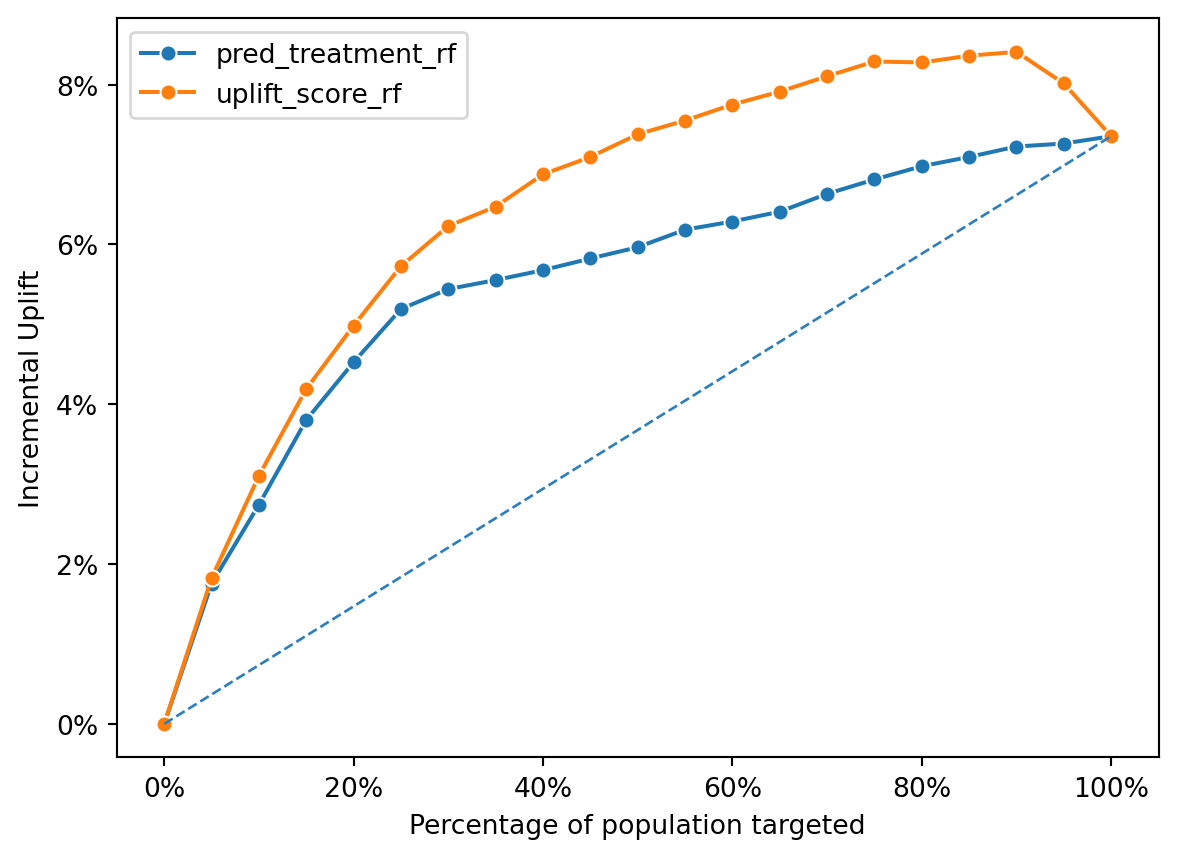

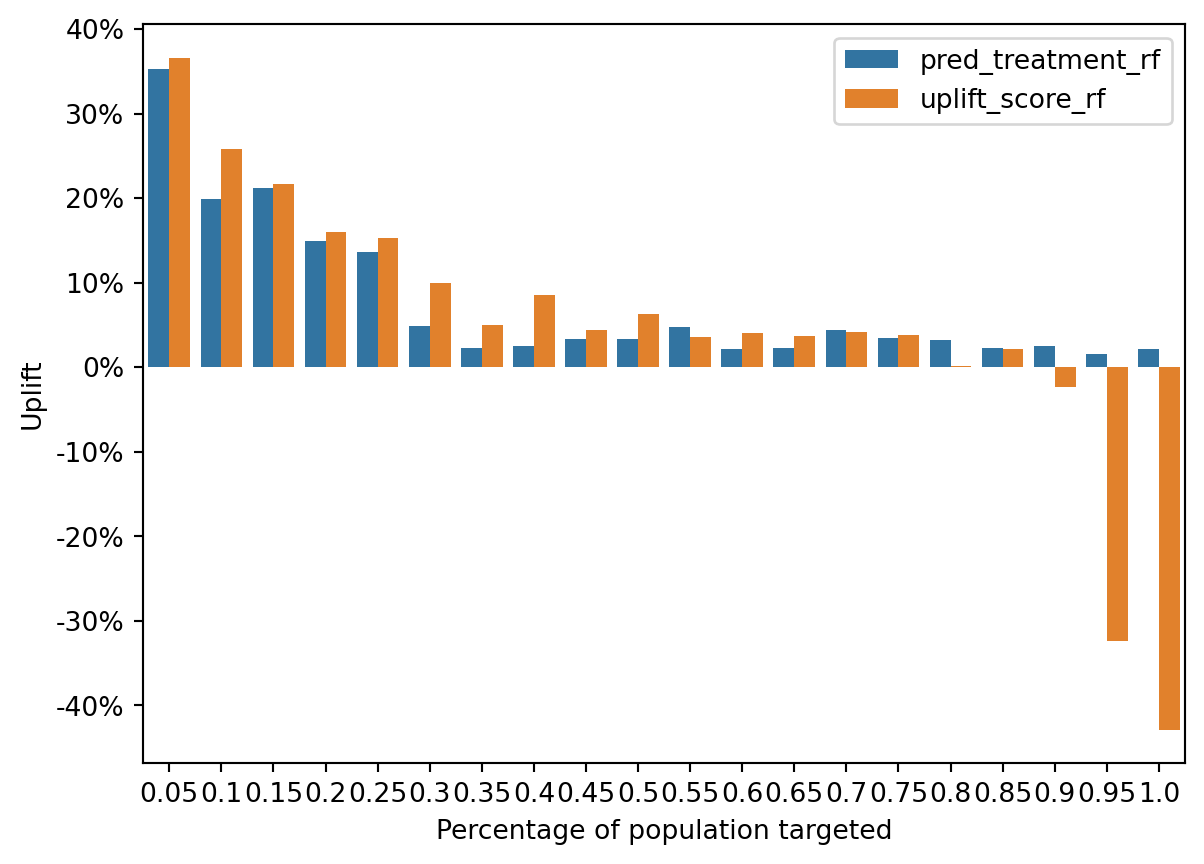

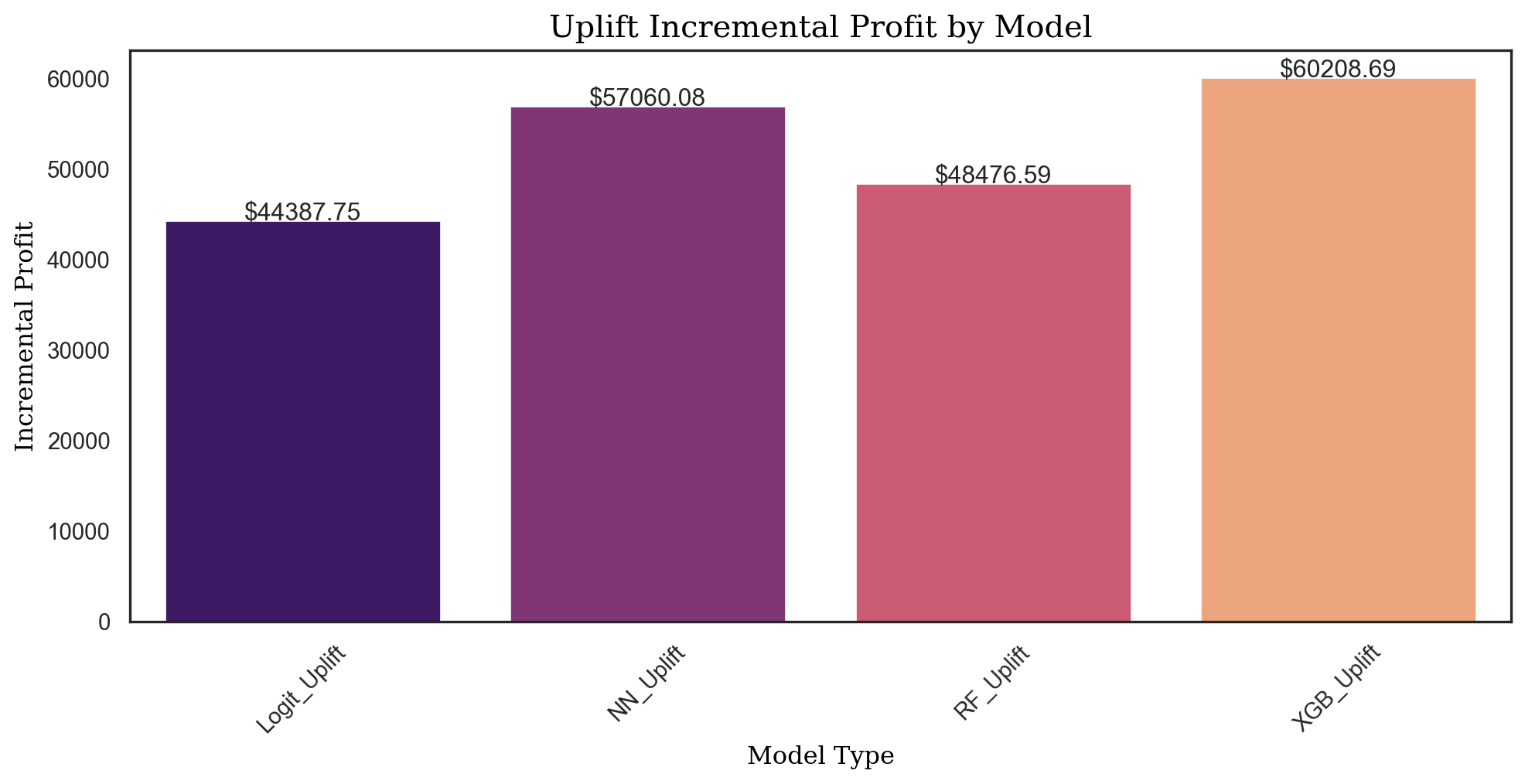

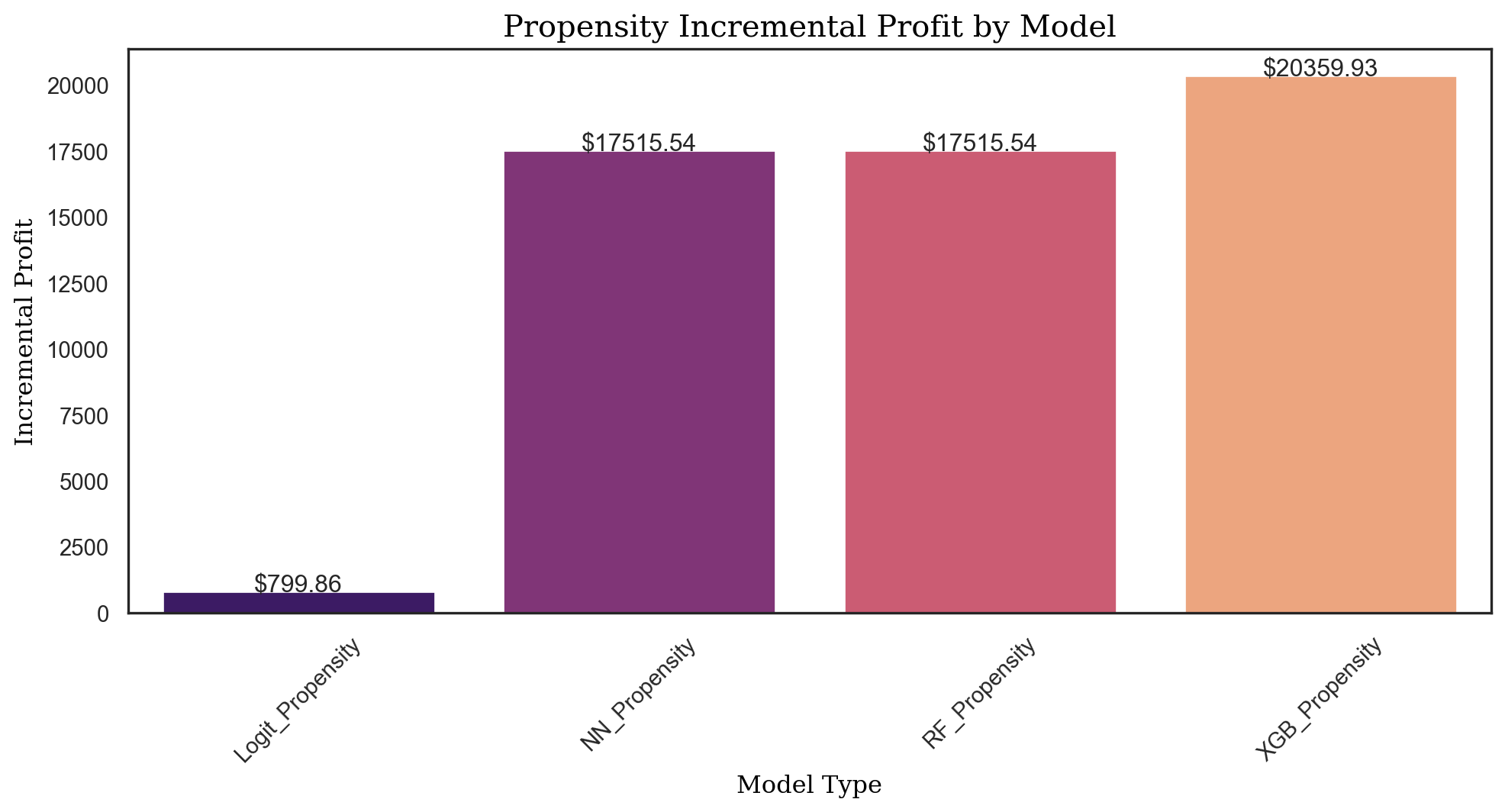

Uplift Model Performance: The line for the uplift_score generally lies above the line for the pred_treatment, indicating that the uplift model consistently provides a higher incremental uplift across the different percentages of the population targeted.

Diminishing Returns: Both lines show a trend of diminishing returns as more of the population is targeted, with the incremental uplift peaking and then plateauing or slightly decreasing, suggesting an optimal targeting point before 100%.

Comparison: The propensity model appears to perform better than random targeting (which would be a straight line from the origin), but the uplift model is more effective in achieving incremental gains. This suggests that while the propensity model can identify likely responders, the uplift model is better at identifying those for whom the treatment would make a difference in their behavior.

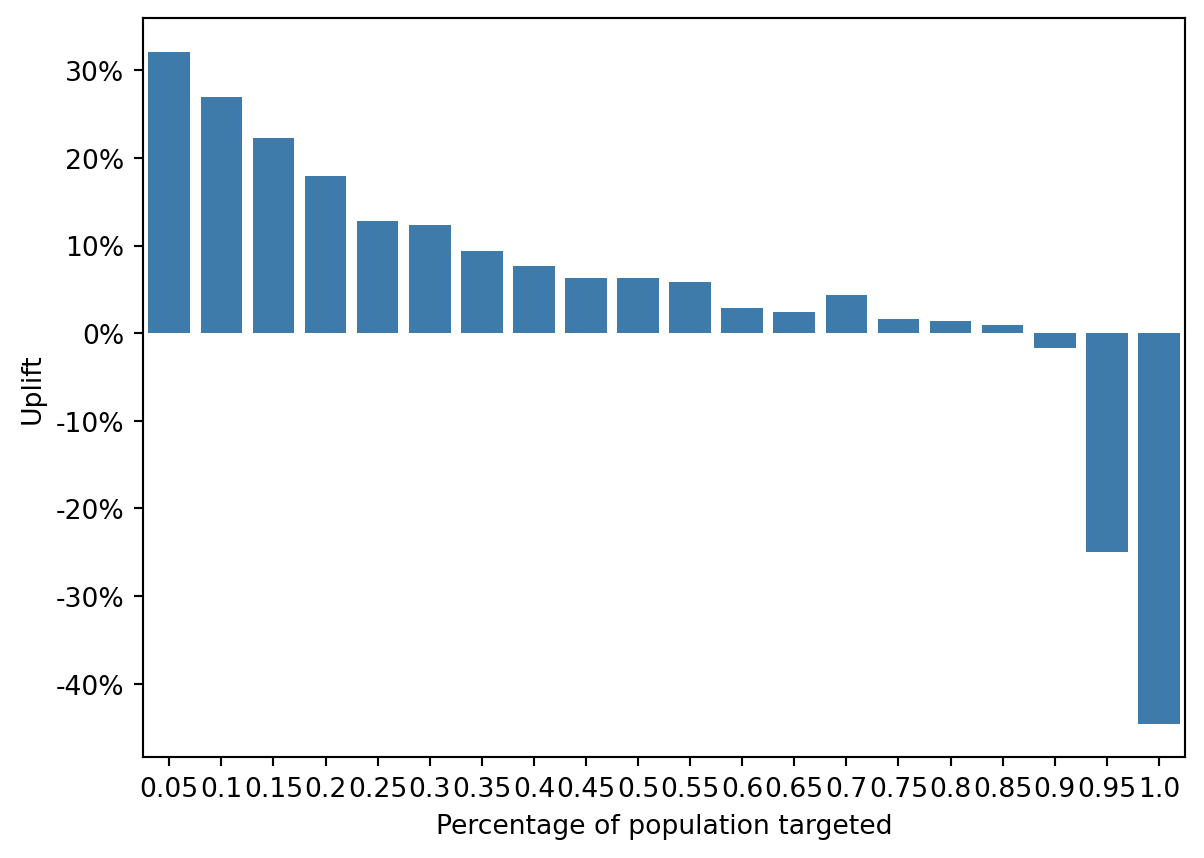

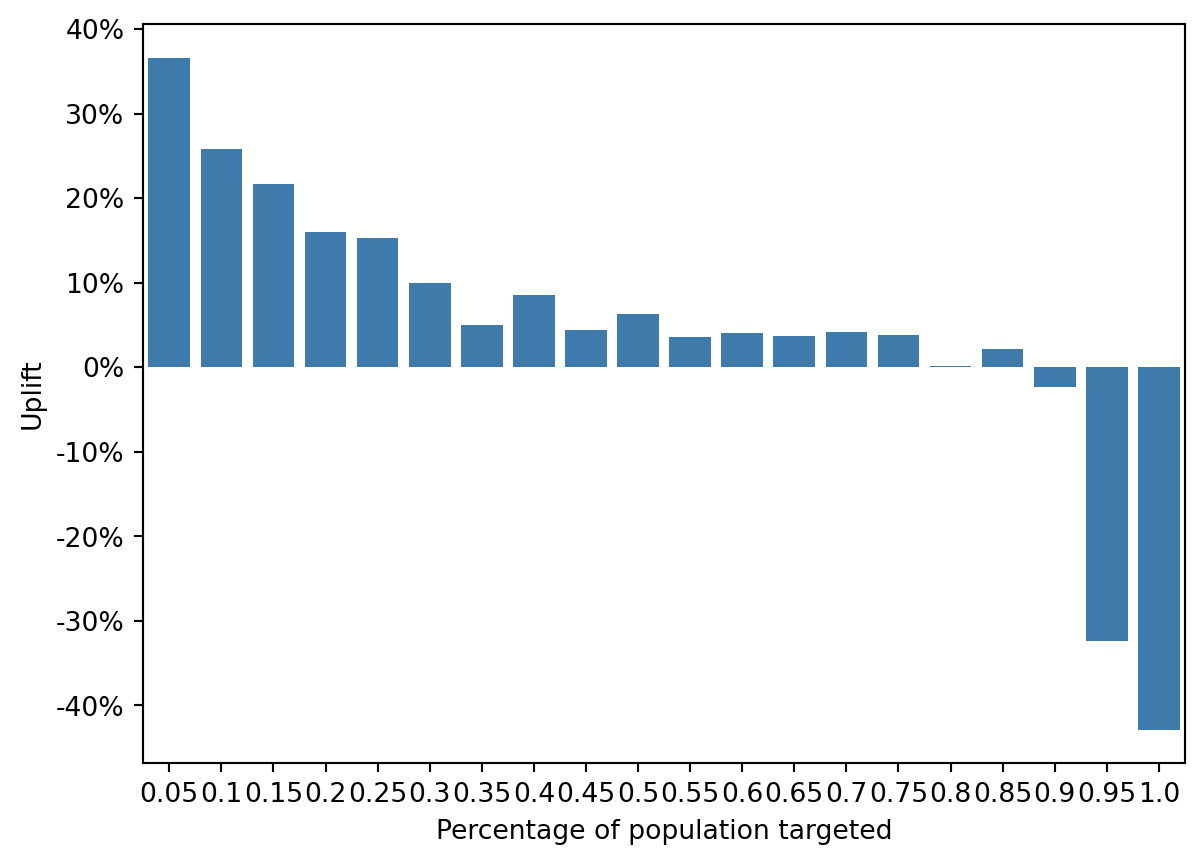

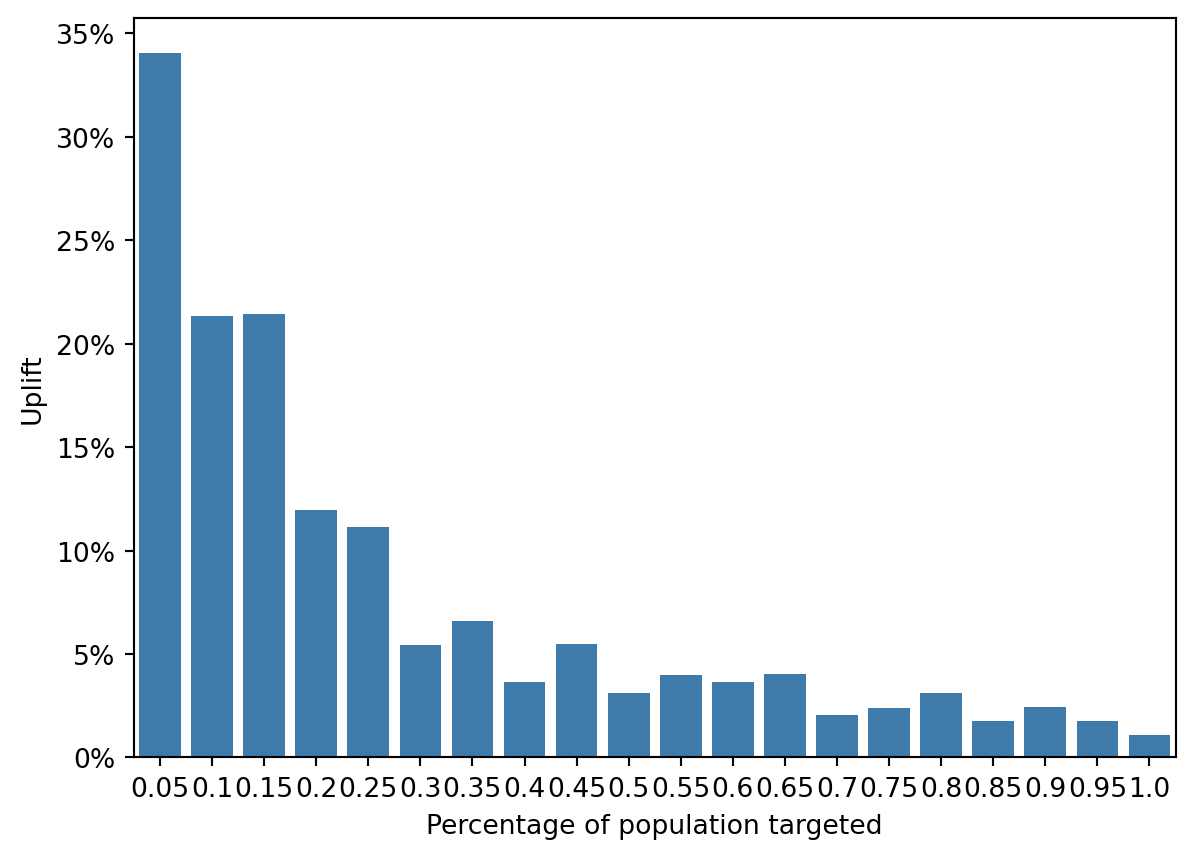

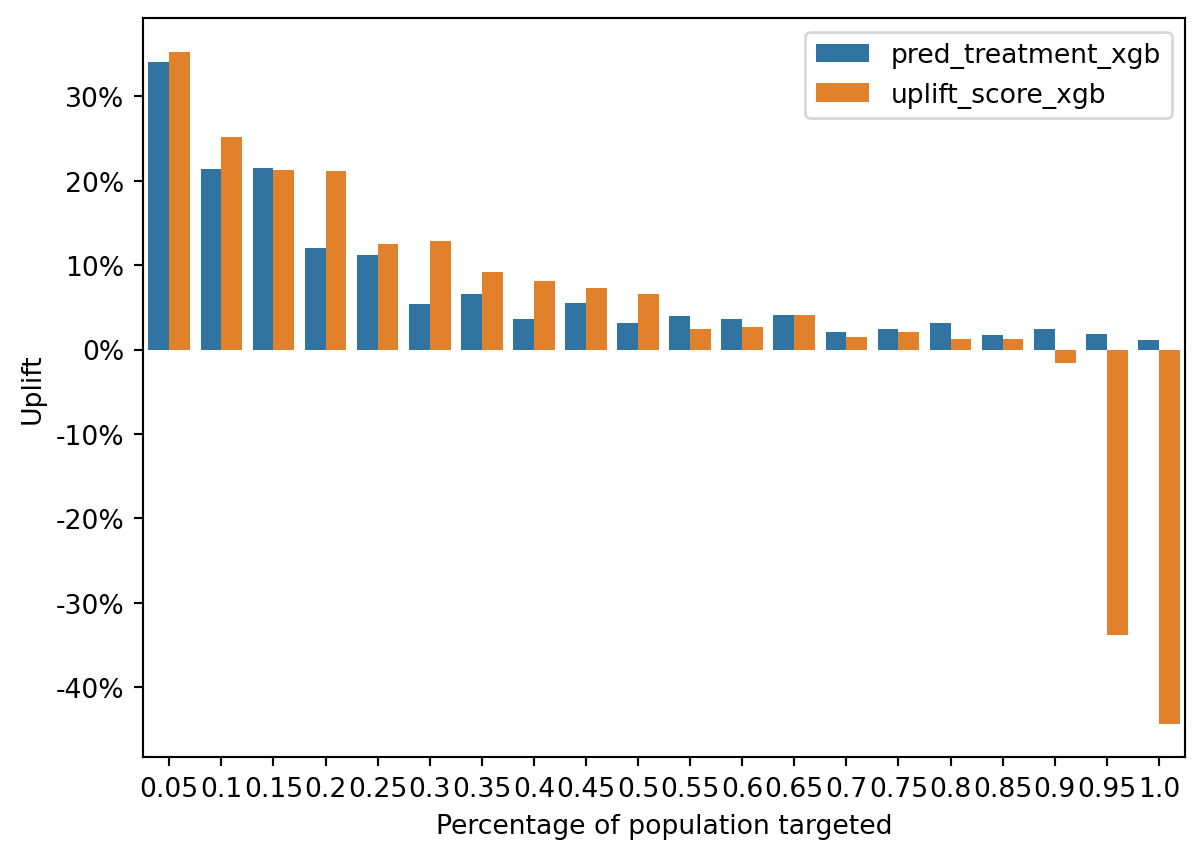

Uplift Distribution: Both sets of bars show a decrease in uplift as we move through the population segments, which is expected as the most responsive individuals are often targeted first.

Model Comparison: In some segments, the uplift_score bars are higher than the pred_treatment bars, reinforcing the idea that the uplift model is more effective in certain segments.

Negative Uplift: Towards the latter segments, both models show negative uplift, but the uplift_score model tends to have less severe negative values. The uplift model places customers with high incrementality in earlier deciles. The incrementality is lower for propensity model because it targets Persuadables and Sure Things whereas the uplift model targets only the former. This suggests that the uplift model may be better at minimizing the risk of targeting individuals who would have a negative response to the treatment.

That said, the propensity model still performs well here; this is because the customers who have the best propensity also tend to have the best uplift in this data:

Correlation

Data : cg_rct_stacked

Method : pearson

Cutoff : 0

Variables: pred_treatment, pred_control, uplift_score

Null hyp.: variables x and y are not correlated

Alt. hyp.: variables x and y are correlated

Correlation matrix:

pred_treatment pred_control

pred_control 0.28

uplift_score 0.55 -0.65

p.values:

pred_treatment pred_control

pred_control 0.0

uplift_score 0.0 0.0The positive correlation between pred_treatment and uplift_score is in line with what we would expect, as a higher predicted treatment response should correspond with a higher uplift score. The negative correlation between pred_control and uplift_score suggests that individuals who are likely to respond without any intervention (as predicted by the control model) are properly being identified as not contributing to uplift, which is a desirable feature of a good uplift model.

32065.482935153585Multi-layer Perceptron (NN)

Data : cg_rct_stacked

Response variable : converted

Level : yes

Explanatory variables: GameLevel, NumGameDays, NumGameDays4Plus, NumInGameMessagesSent, NumFriends, NumFriendRequestIgnored, NumSpaceHeroBadges, AcquiredSpaceship, AcquiredIonWeapon, TimesLostSpaceship, TimesKilled, TimesCaptain, TimesNavigator, PurchasedCoinPackSmall, PurchasedCoinPackLarge, NumAdsClicked, DaysUser, UserConsole, UserHasOldOS

Model type : classification

Nr. of features : (19, 19)

Nr. of observations : 21,000

Hidden_layer_sizes : (1,)

Activation function : tanh

Solver : lbfgs

Alpha : 0.1

Batch size : auto

Learning rate : 0.001

Maximum iterations : 10000

random_state : 1234

AUC : 0.712

Raw data :

GameLevel NumGameDays NumGameDays4Plus NumInGameMessagesSent NumFriends NumFriendRequestIgnored NumSpaceHeroBadges AcquiredSpaceship AcquiredIonWeapon TimesLostSpaceship TimesKilled TimesCaptain TimesNavigator PurchasedCoinPackSmall PurchasedCoinPackLarge NumAdsClicked DaysUser UserConsole UserHasOldOS

5 15 0 179 362 50 0 yes no 22 0 4 4 no no 2 1308 yes no

4 4 0 36 0 0 0 no no 0 0 0 0 no no 2 2922 yes no

8 17 0 222 20 63 10 yes no 10 0 9 6 yes no 4 2192 yes no

10 18 2 0 56 6 2 no no 1 0 0 0 no yes 13 2313 yes no

10 20 5 36 0 16 0 no no 0 0 0 0 no yes 9 1766 yes no

Estimation data :

GameLevel NumGameDays NumGameDays4Plus NumInGameMessagesSent NumFriends NumFriendRequestIgnored NumSpaceHeroBadges TimesLostSpaceship TimesKilled TimesCaptain TimesNavigator NumAdsClicked DaysUser AcquiredSpaceship_yes AcquiredIonWeapon_yes PurchasedCoinPackSmall_yes PurchasedCoinPackLarge_yes UserConsole_yes UserHasOldOS_yes

-0.480555 0.361225 -0.411200 0.968856 3.186178 0.577047 -0.371464 1.269286 -0.081624 0.347884 0.390873 -0.988082 -1.999414 True False False False True False

-0.842392 -1.185248 -0.411200 -0.360274 -0.525098 -0.876726 -0.371464 -0.301827 -0.081624 -0.205861 -0.201936 -0.988082 0.439831 False False False False True False

0.604958 0.642403 -0.411200 1.368525 -0.320055 0.955027 4.091205 0.412315 -0.081624 1.040065 0.687277 -0.695079 -0.663421 True False True False True False

1.328633 0.782991 0.170373 -0.694880 0.049022 -0.702273 0.521070 -0.230413 -0.081624 -0.205861 -0.201936 0.623433 -0.480554 False False False True True False

1.328633 1.064168 1.042734 -0.360274 -0.525098 -0.411519 -0.371464 -0.301827 -0.081624 -0.205861 -0.201936 0.037428 -1.307237 False False False True True Falsehls = [(1,), (2,), (3,), (3, 3), (4, 2), (5, 5), (5,), (10,), (5,10), (10,5)]

alpha = [0.0001, 0.001, 0.01, 0.1, 1]

param_grid = {"hidden_layer_sizes": hls, "alpha": alpha}

scoring = {"AUC": "roc_auc"}

clf_cv_treatment = GridSearchCV(

clf_treatment.fitted, param_grid, scoring=scoring, cv=5, n_jobs=4, refit="AUC", verbose=5

)Fitting 5 folds for each of 50 candidates, totalling 250 fits/Users/duyentran/Library/Python/3.11/lib/python/site-packages/sklearn/neural_network/_multilayer_perceptron.py:546: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

self.n_iter_ = _check_optimize_result("lbfgs", opt_res, self.max_iter)

/Users/duyentran/Library/Python/3.11/lib/python/site-packages/sklearn/neural_network/_multilayer_perceptron.py:546: ConvergenceWarning: lbfgs failed to converge (status=1):

STOP: TOTAL NO. of ITERATIONS REACHED LIMIT.

Increase the number of iterations (max_iter) or scale the data as shown in:

https://scikit-learn.org/stable/modules/preprocessing.html

self.n_iter_ = _check_optimize_result("lbfgs", opt_res, self.max_iter)GridSearchCV(cv=5,

estimator=MLPClassifier(activation='tanh', alpha=0.1,

hidden_layer_sizes=(1,), max_iter=10000,

random_state=1234, solver='lbfgs'),

n_jobs=4,

param_grid={'alpha': [0.0001, 0.001, 0.01, 0.1, 1],

'hidden_layer_sizes': [(1,), (2,), (3,), (3, 3),

(4, 2), (5, 5), (5,), (10,),

(5, 10), (10, 5)]},

refit='AUC', scoring={'AUC': 'roc_auc'}, verbose=5)In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. GridSearchCV(cv=5,

estimator=MLPClassifier(activation='tanh', alpha=0.1,

hidden_layer_sizes=(1,), max_iter=10000,

random_state=1234, solver='lbfgs'),

n_jobs=4,

param_grid={'alpha': [0.0001, 0.001, 0.01, 0.1, 1],

'hidden_layer_sizes': [(1,), (2,), (3,), (3, 3),

(4, 2), (5, 5), (5,), (10,),

(5, 10), (10, 5)]},

refit='AUC', scoring={'AUC': 'roc_auc'}, verbose=5)MLPClassifier(activation='tanh', alpha=0.1, hidden_layer_sizes=(1,),

max_iter=10000, random_state=1234, solver='lbfgs')MLPClassifier(activation='tanh', alpha=0.1, hidden_layer_sizes=(1,),

max_iter=10000, random_state=1234, solver='lbfgs')Multi-layer Perceptron (NN)

Data : cg_rct_stacked

Response variable : converted

Level : yes

Explanatory variables: GameLevel, NumGameDays, NumGameDays4Plus, NumInGameMessagesSent, NumFriends, NumFriendRequestIgnored, NumSpaceHeroBadges, AcquiredSpaceship, AcquiredIonWeapon, TimesLostSpaceship, TimesKilled, TimesCaptain, TimesNavigator, PurchasedCoinPackSmall, PurchasedCoinPackLarge, NumAdsClicked, DaysUser, UserConsole, UserHasOldOS

Model type : classification

Nr. of features : (19, 19)

Nr. of observations : 21,000

Hidden_layer_sizes : (4, 2)

Activation function : tanh

Solver : lbfgs

Alpha : 0.0001

Batch size : auto

Learning rate : 0.001

Maximum iterations : 10000

random_state : 1234

AUC : 0.792

Raw data :

GameLevel NumGameDays NumGameDays4Plus NumInGameMessagesSent NumFriends NumFriendRequestIgnored NumSpaceHeroBadges AcquiredSpaceship AcquiredIonWeapon TimesLostSpaceship TimesKilled TimesCaptain TimesNavigator PurchasedCoinPackSmall PurchasedCoinPackLarge NumAdsClicked DaysUser UserConsole UserHasOldOS

5 15 0 179 362 50 0 yes no 22 0 4 4 no no 2 1308 yes no

4 4 0 36 0 0 0 no no 0 0 0 0 no no 2 2922 yes no

8 17 0 222 20 63 10 yes no 10 0 9 6 yes no 4 2192 yes no

10 18 2 0 56 6 2 no no 1 0 0 0 no yes 13 2313 yes no

10 20 5 36 0 16 0 no no 0 0 0 0 no yes 9 1766 yes no

Estimation data :

GameLevel NumGameDays NumGameDays4Plus NumInGameMessagesSent NumFriends NumFriendRequestIgnored NumSpaceHeroBadges TimesLostSpaceship TimesKilled TimesCaptain TimesNavigator NumAdsClicked DaysUser AcquiredSpaceship_yes AcquiredIonWeapon_yes PurchasedCoinPackSmall_yes PurchasedCoinPackLarge_yes UserConsole_yes UserHasOldOS_yes

-0.480555 0.361225 -0.411200 0.968856 3.186178 0.577047 -0.371464 1.269286 -0.081624 0.347884 0.390873 -0.988082 -1.999414 True False False False True False

-0.842392 -1.185248 -0.411200 -0.360274 -0.525098 -0.876726 -0.371464 -0.301827 -0.081624 -0.205861 -0.201936 -0.988082 0.439831 False False False False True False

0.604958 0.642403 -0.411200 1.368525 -0.320055 0.955027 4.091205 0.412315 -0.081624 1.040065 0.687277 -0.695079 -0.663421 True False True False True False

1.328633 0.782991 0.170373 -0.694880 0.049022 -0.702273 0.521070 -0.230413 -0.081624 -0.205861 -0.201936 0.623433 -0.480554 False False False True True False

1.328633 1.064168 1.042734 -0.360274 -0.525098 -0.411519 -0.371464 -0.301827 -0.081624 -0.205861 -0.201936 0.037428 -1.307237 False False False True True FalseMulti-layer Perceptron (NN)

Data : cg_rct_stacked

Response variable : converted

Level : yes

Explanatory variables: GameLevel, NumGameDays, NumGameDays4Plus, NumInGameMessagesSent, NumFriends, NumFriendRequestIgnored, NumSpaceHeroBadges, AcquiredSpaceship, AcquiredIonWeapon, TimesLostSpaceship, TimesKilled, TimesCaptain, TimesNavigator, PurchasedCoinPackSmall, PurchasedCoinPackLarge, NumAdsClicked, DaysUser, UserConsole, UserHasOldOS

Model type : classification

Nr. of features : (19, 19)

Nr. of observations : 21,000

Hidden_layer_sizes : (1,)

Activation function : tanh

Solver : lbfgs

Alpha : 0.0001

Batch size : auto

Learning rate : 0.001

Maximum iterations : 10000

random_state : 1234

AUC : 0.841

Raw data :

GameLevel NumGameDays NumGameDays4Plus NumInGameMessagesSent NumFriends NumFriendRequestIgnored NumSpaceHeroBadges AcquiredSpaceship AcquiredIonWeapon TimesLostSpaceship TimesKilled TimesCaptain TimesNavigator PurchasedCoinPackSmall PurchasedCoinPackLarge NumAdsClicked DaysUser UserConsole UserHasOldOS

7 18 0 124 0 81 0 yes no 8 0 0 4 no yes 3 2101 no no

10 3 2 60 479 18 0 no no 10 7 0 0 yes no 7 1644 yes no

2 1 0 0 0 0 0 no no 0 0 0 2 no no 8 3197 yes yes

8 15 0 0 51 6 0 yes no 0 0 2 1 yes no 21 2009 yes no

10 18 0 0 0 0 0 no no 0 0 0 0 yes no 6 3288 yes no

Estimation data :

GameLevel NumGameDays NumGameDays4Plus NumInGameMessagesSent NumFriends NumFriendRequestIgnored NumSpaceHeroBadges TimesLostSpaceship TimesKilled TimesCaptain TimesNavigator NumAdsClicked DaysUser AcquiredSpaceship_yes AcquiredIonWeapon_yes PurchasedCoinPackSmall_yes PurchasedCoinPackLarge_yes UserConsole_yes UserHasOldOS_yes

0.283862 0.806405 -0.399092 0.485069 -0.513099 1.509762 -0.29461 0.343880 -0.075167 -0.180445 0.397524 -0.868677 -0.787337 True False False True False False

1.373047 -1.292688 0.204178 -0.119399 4.411754 -0.342325 -0.29461 0.527329 1.452288 -0.180445 -0.209504 -0.333738 -1.475407 False False True False True False

-1.531446 -1.572567 -0.399092 -0.686088 -0.513099 -0.871493 -0.29461 -0.389917 -0.075167 -0.180445 0.094010 -0.200003 0.862827 False False False False True True

0.646924 0.386586 -0.399092 -0.686088 0.011259 -0.695104 -0.29461 -0.389917 -0.075167 0.052946 -0.057747 1.538548 -0.925854 True False True False True False

1.373047 0.806405 -0.399092 -0.686088 -0.513099 -0.871493 -0.29461 -0.389917 -0.075167 -0.180445 -0.209504 -0.467473 0.999839 False False True False True FalseFitting 5 folds for each of 50 candidates, totalling 250 fitsGridSearchCV(cv=5,

estimator=MLPClassifier(activation='tanh', hidden_layer_sizes=(1,),

max_iter=10000, random_state=1234,

solver='lbfgs'),

n_jobs=4,

param_grid={'alpha': [0.0001, 0.001, 0.01, 0.1, 1],

'hidden_layer_sizes': [(1,), (2,), (3,), (3, 3),

(4, 2), (5, 5), (5,), (10,),

(5, 10), (10, 5)]},

refit='AUC', scoring={'AUC': 'roc_auc'}, verbose=5)In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. GridSearchCV(cv=5,

estimator=MLPClassifier(activation='tanh', hidden_layer_sizes=(1,),

max_iter=10000, random_state=1234,

solver='lbfgs'),

n_jobs=4,

param_grid={'alpha': [0.0001, 0.001, 0.01, 0.1, 1],

'hidden_layer_sizes': [(1,), (2,), (3,), (3, 3),

(4, 2), (5, 5), (5,), (10,),

(5, 10), (10, 5)]},

refit='AUC', scoring={'AUC': 'roc_auc'}, verbose=5)MLPClassifier(activation='tanh', hidden_layer_sizes=(1,), max_iter=10000,

random_state=1234, solver='lbfgs')MLPClassifier(activation='tanh', hidden_layer_sizes=(1,), max_iter=10000,

random_state=1234, solver='lbfgs')Multi-layer Perceptron (NN)

Data : cg_rct_stacked

Response variable : converted

Level : yes

Explanatory variables: GameLevel, NumGameDays, NumGameDays4Plus, NumInGameMessagesSent, NumFriends, NumFriendRequestIgnored, NumSpaceHeroBadges, AcquiredSpaceship, AcquiredIonWeapon, TimesLostSpaceship, TimesKilled, TimesCaptain, TimesNavigator, PurchasedCoinPackSmall, PurchasedCoinPackLarge, NumAdsClicked, DaysUser, UserConsole, UserHasOldOS

Model type : classification

Nr. of features : (19, 19)

Nr. of observations : 21,000

Hidden_layer_sizes : (3, 3)

Activation function : tanh

Solver : lbfgs

Alpha : 1

Batch size : auto

Learning rate : 0.001

Maximum iterations : 10000

random_state : 1234

AUC : 0.861

Raw data :

GameLevel NumGameDays NumGameDays4Plus NumInGameMessagesSent NumFriends NumFriendRequestIgnored NumSpaceHeroBadges AcquiredSpaceship AcquiredIonWeapon TimesLostSpaceship TimesKilled TimesCaptain TimesNavigator PurchasedCoinPackSmall PurchasedCoinPackLarge NumAdsClicked DaysUser UserConsole UserHasOldOS

7 18 0 124 0 81 0 yes no 8 0 0 4 no yes 3 2101 no no

10 3 2 60 479 18 0 no no 10 7 0 0 yes no 7 1644 yes no

2 1 0 0 0 0 0 no no 0 0 0 2 no no 8 3197 yes yes

8 15 0 0 51 6 0 yes no 0 0 2 1 yes no 21 2009 yes no

10 18 0 0 0 0 0 no no 0 0 0 0 yes no 6 3288 yes no

Estimation data :

GameLevel NumGameDays NumGameDays4Plus NumInGameMessagesSent NumFriends NumFriendRequestIgnored NumSpaceHeroBadges TimesLostSpaceship TimesKilled TimesCaptain TimesNavigator NumAdsClicked DaysUser AcquiredSpaceship_yes AcquiredIonWeapon_yes PurchasedCoinPackSmall_yes PurchasedCoinPackLarge_yes UserConsole_yes UserHasOldOS_yes

0.283862 0.806405 -0.399092 0.485069 -0.513099 1.509762 -0.29461 0.343880 -0.075167 -0.180445 0.397524 -0.868677 -0.787337 True False False True False False

1.373047 -1.292688 0.204178 -0.119399 4.411754 -0.342325 -0.29461 0.527329 1.452288 -0.180445 -0.209504 -0.333738 -1.475407 False False True False True False

-1.531446 -1.572567 -0.399092 -0.686088 -0.513099 -0.871493 -0.29461 -0.389917 -0.075167 -0.180445 0.094010 -0.200003 0.862827 False False False False True True

0.646924 0.386586 -0.399092 -0.686088 0.011259 -0.695104 -0.29461 -0.389917 -0.075167 0.052946 -0.057747 1.538548 -0.925854 True False True False True False

1.373047 0.806405 -0.399092 -0.686088 -0.513099 -0.871493 -0.29461 -0.389917 -0.075167 -0.180445 -0.209504 -0.467473 0.999839 False False True False True False| pred | bins | cum_prop | T_resp | T_n | C_resp | C_n | incremental_resp | inc_uplift | uplift | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | uplift_score_nn | 1 | 0.05 | 198 | 450 | 71 | 597 | 144.482412 | 1.605360 | 0.321072 |

| 1 | uplift_score_nn | 2 | 0.10 | 354 | 900 | 113 | 1138 | 264.632689 | 2.940363 | 0.269033 |

| 2 | uplift_score_nn | 3 | 0.15 | 476 | 1350 | 138 | 1650 | 363.090909 | 4.034343 | 0.222283 |

| 3 | uplift_score_nn | 4 | 0.20 | 588 | 1800 | 174 | 2164 | 443.268022 | 4.925200 | 0.178850 |

| 4 | uplift_score_nn | 5 | 0.25 | 673 | 2250 | 204 | 2661 | 500.508455 | 5.561205 | 0.128527 |

| 5 | uplift_score_nn | 6 | 0.30 | 754 | 2700 | 233 | 3172 | 555.670870 | 6.174121 | 0.123249 |

| 6 | uplift_score_nn | 7 | 0.35 | 811 | 3150 | 250 | 3696 | 597.931818 | 6.643687 | 0.094224 |

| 7 | uplift_score_nn | 8 | 0.40 | 854 | 3600 | 259 | 4186 | 631.257525 | 7.013973 | 0.077188 |

| 8 | uplift_score_nn | 9 | 0.45 | 893 | 4050 | 271 | 4696 | 659.279813 | 7.325331 | 0.063137 |

| 9 | uplift_score_nn | 10 | 0.50 | 931 | 4500 | 282 | 5210 | 687.429942 | 7.638110 | 0.063044 |

| 10 | uplift_score_nn | 11 | 0.55 | 969 | 4950 | 294 | 5668 | 712.242766 | 7.913809 | 0.058244 |

| 11 | uplift_score_nn | 12 | 0.60 | 987 | 5400 | 299 | 6090 | 721.876847 | 8.020854 | 0.028152 |

| 12 | uplift_score_nn | 13 | 0.65 | 1003 | 5850 | 304 | 6539 | 731.031809 | 8.122576 | 0.024420 |

| 13 | uplift_score_nn | 14 | 0.70 | 1025 | 6300 | 306 | 6926 | 746.657522 | 8.296195 | 0.043721 |

| 14 | uplift_score_nn | 15 | 0.75 | 1037 | 6750 | 311 | 7387 | 752.818329 | 8.364648 | 0.015821 |

| 15 | uplift_score_nn | 16 | 0.80 | 1052 | 7200 | 319 | 7799 | 757.500705 | 8.416675 | 0.013916 |

| 16 | uplift_score_nn | 17 | 0.85 | 1063 | 7650 | 326 | 8249 | 760.672445 | 8.451916 | 0.008889 |

| 17 | uplift_score_nn | 18 | 0.90 | 1087 | 8100 | 353 | 8631 | 755.717414 | 8.396860 | -0.017347 |

| 18 | uplift_score_nn | 19 | 0.95 | 1138 | 8550 | 431 | 8846 | 721.421886 | 8.015799 | -0.249457 |

| 19 | uplift_score_nn | 20 | 1.00 | 1174 | 9000 | 512 | 9000 | 662.000000 | 7.355556 | -0.445974 |

This pattern indicates that the first segments are highly responsive to the campaign, while the later segments may have been negatively influenced by the campaign or would have been better off not being targeted at all.

| pred | bins | cum_prop | T_resp | T_n | C_resp | C_n | incremental_resp | inc_uplift | uplift | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | pred_treatment_nn | 1 | 0.05 | 201 | 450 | 74 | 586 | 144.174061 | 1.601934 | 0.320387 |

| 1 | pred_treatment_nn | 2 | 0.10 | 351 | 900 | 140 | 1076 | 233.899628 | 2.598885 | 0.198639 |

| 2 | pred_treatment_nn | 3 | 0.15 | 475 | 1350 | 178 | 1561 | 321.060218 | 3.567336 | 0.197205 |

| 3 | pred_treatment_nn | 4 | 0.20 | 589 | 1800 | 220 | 2044 | 395.262231 | 4.391803 | 0.166377 |

| 4 | pred_treatment_nn | 5 | 0.25 | 686 | 2250 | 257 | 2497 | 454.422107 | 5.049135 | 0.133878 |

| 5 | pred_treatment_nn | 6 | 0.30 | 765 | 2700 | 293 | 2962 | 497.916948 | 5.532411 | 0.098136 |

| 6 | pred_treatment_nn | 7 | 0.35 | 833 | 3150 | 346 | 3352 | 507.850835 | 5.642787 | 0.015214 |

| 7 | pred_treatment_nn | 8 | 0.40 | 896 | 3600 | 396 | 3698 | 510.494321 | 5.672159 | -0.004509 |

| 8 | pred_treatment_nn | 9 | 0.45 | 941 | 4050 | 444 | 4013 | 492.906305 | 5.476737 | -0.052381 |

| 9 | pred_treatment_nn | 10 | 0.50 | 982 | 4500 | 477 | 4398 | 493.937244 | 5.488192 | 0.005397 |

| 10 | pred_treatment_nn | 11 | 0.55 | 1023 | 4950 | 486 | 4870 | 529.016427 | 5.877960 | 0.072043 |

| 11 | pred_treatment_nn | 12 | 0.60 | 1053 | 5400 | 491 | 5383 | 560.449378 | 6.227215 | 0.056920 |

| 12 | pred_treatment_nn | 13 | 0.65 | 1083 | 5850 | 496 | 5908 | 591.869330 | 6.576326 | 0.057143 |

| 13 | pred_treatment_nn | 14 | 0.70 | 1102 | 6300 | 503 | 6352 | 603.117758 | 6.701308 | 0.026456 |

| 14 | pred_treatment_nn | 15 | 0.75 | 1116 | 6750 | 505 | 6835 | 617.280176 | 6.858669 | 0.026970 |

| 15 | pred_treatment_nn | 16 | 0.80 | 1131 | 7200 | 506 | 7272 | 630.009901 | 7.000110 | 0.031045 |

| 16 | pred_treatment_nn | 17 | 0.85 | 1143 | 7650 | 507 | 7657 | 636.463497 | 7.071817 | 0.024069 |

| 17 | pred_treatment_nn | 18 | 0.90 | 1161 | 8100 | 509 | 8123 | 653.441216 | 7.260458 | 0.035708 |

| 18 | pred_treatment_nn | 19 | 0.95 | 1170 | 8550 | 510 | 8541 | 659.462592 | 7.327362 | 0.017608 |

| 19 | pred_treatment_nn | 20 | 1.00 | 1174 | 9000 | 512 | 9000 | 662.000000 | 7.355556 | 0.004532 |

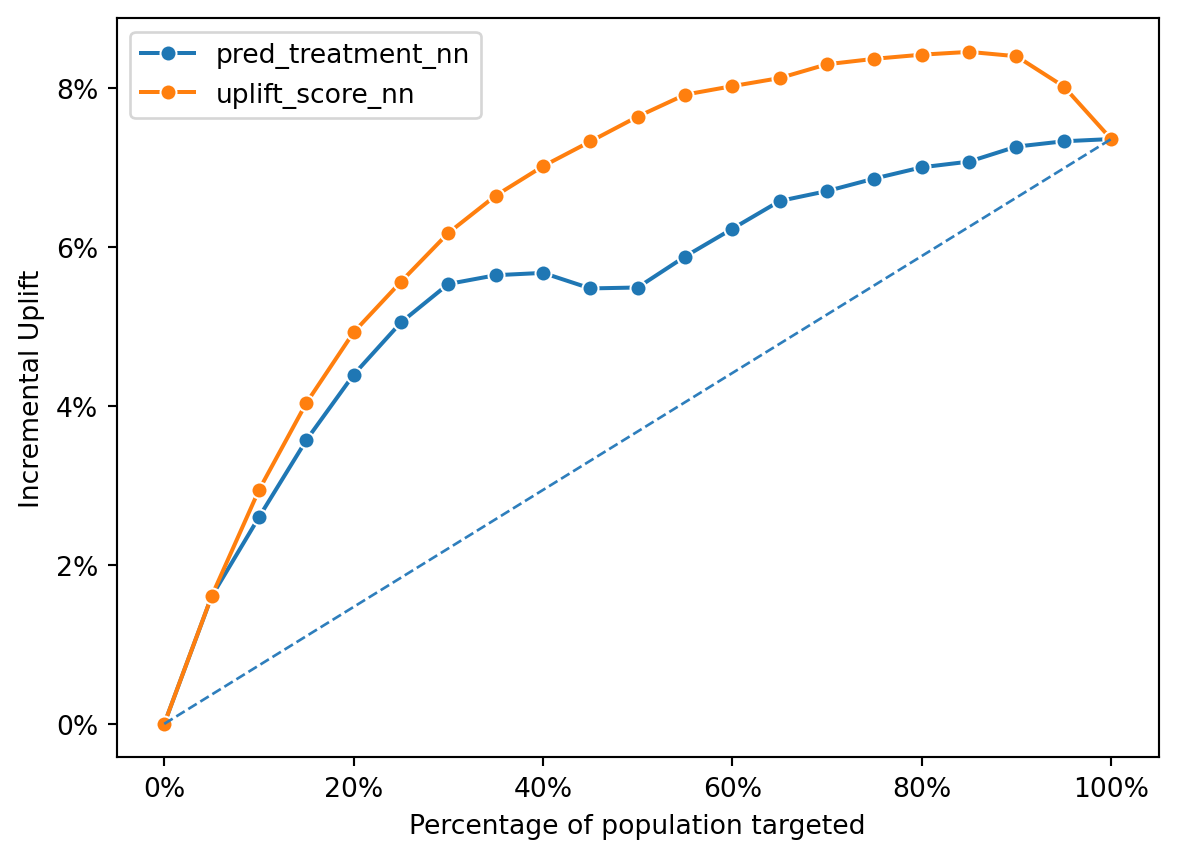

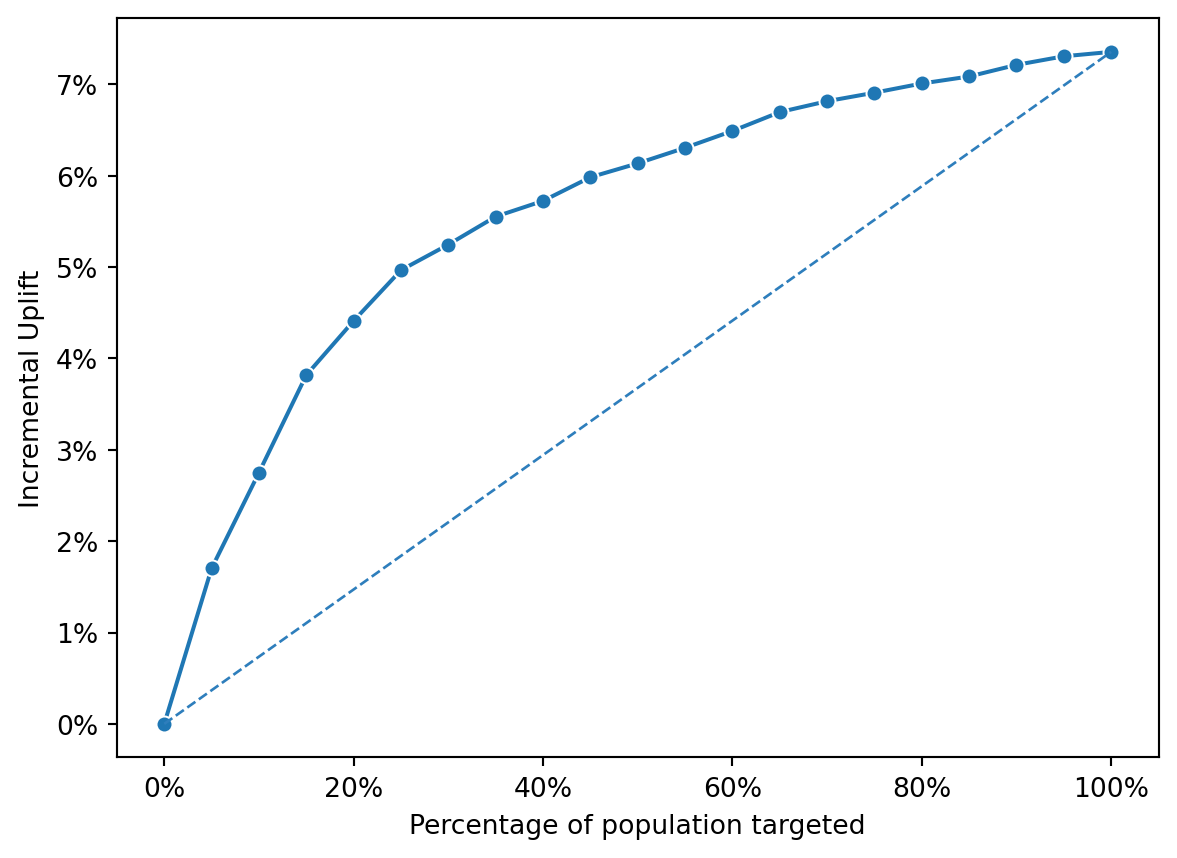

The uplift_score_nn line generally lies above the pred_treatment_nn line, indicating that the uplift model predicts a higher incremental uplift across the different segments of the targeted population.

Both lines show a rise in incremental uplift with an increase in the targeted population, reaching a peak, and then beginning to plateau, suggesting a point of diminishing returns.

The uplift model’s curve suggests that targeting based on its scores leads to higher incremental gains compared to the propensity model, which is likely predicting the likelihood of response to the treatment without considering the control group’s response.

The uplift decreases from the first to the last segment, which suggests that the initial segments are the most responsive to the targeting. The treatment model shows positive uplift in the early segments but drops off more sharply than the uplift model in later segments, indicating that the treatment model might be less effective at distinguishing between those who will respond due to the treatment and those who would have responded anyway.

The uplift model has a more gradual decline in uplift across segments and less negative uplift in the lower segments, which could imply that it is more effective at targeting the right individuals.

The negative values in later segments for both models suggest that certain individuals are either not influenced by or negatively influenced by the treatment. This could represent individuals who might purchase or respond anyway, so the propensity might have been an unnecessary expense for this group, or it could represent a group for whom the treatment had an adverse effect.

45823.831691362975Random Forest

Data : cg_rct_stacked

Response variable : converted

Level : yes

Explanatory variables: GameLevel, NumGameDays, NumGameDays4Plus, NumInGameMessagesSent, NumFriends, NumFriendRequestIgnored, NumSpaceHeroBadges, AcquiredSpaceship, AcquiredIonWeapon, TimesLostSpaceship, TimesKilled, TimesCaptain, TimesNavigator, PurchasedCoinPackSmall, PurchasedCoinPackLarge, NumAdsClicked, DaysUser, UserConsole, UserHasOldOS

OOB : True

Model type : classification

Nr. of features : (19, 19)

Nr. of observations : 21,000

max_features : sqrt (4)

n_estimators : 100

min_samples_leaf : 1

random_state : 1234

AUC : 0.761

Estimation data :

GameLevel NumGameDays NumGameDays4Plus NumInGameMessagesSent NumFriends NumFriendRequestIgnored NumSpaceHeroBadges TimesLostSpaceship TimesKilled TimesCaptain TimesNavigator NumAdsClicked DaysUser AcquiredSpaceship_yes AcquiredIonWeapon_yes PurchasedCoinPackSmall_yes PurchasedCoinPackLarge_yes UserConsole_yes UserHasOldOS_yes

5 15 0 179 362 50 0 22 0 4 4 2 1308 True False False False True False

4 4 0 36 0 0 0 0 0 0 0 2 2922 False False False False True False

8 17 0 222 20 63 10 10 0 9 6 4 2192 True False True False True False

10 18 2 0 56 6 2 1 0 0 0 13 2313 False False False True True False

10 20 5 36 0 16 0 0 0 0 0 9 1766 False False False True True Falsemax_features = [None, 'auto', 'sqrt', 'log2', 0.25, 0.5, 0.75, 1.0, 2.0, 3.0, 4.0]

n_estimators = [10, 50, 100, 200, 500, 1000]

param_grid = {"max_features": max_features, "n_estimators": n_estimators}

scoring = {"AUC": "roc_auc"}

rf_cv_treatment = GridSearchCV(rf_treatment.fitted, param_grid, scoring=scoring, cv=5, n_jobs=4, refit="AUC", verbose=5)Fitting 5 folds for each of 66 candidates, totalling 330 fits

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(1,); AUC: (test=0.711) total time= 0.2s

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(2,); AUC: (test=0.755) total time= 1.3s

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(3,); AUC: (test=0.755) total time= 7.4s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(3, 3); AUC: (test=0.773) total time= 14.7s

[CV 2/5] END alpha=0.0001, hidden_layer_sizes=(4, 2); AUC: (test=0.777) total time= 14.7s

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(5, 5); AUC: (test=0.766) total time= 20.0s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(5, 5); AUC: (test=0.769) total time= 13.5s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(5,); AUC: (test=0.791) total time= 2.5s

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(10,); AUC: (test=0.759) total time= 2.1s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(10,); AUC: (test=0.712) total time= 2.8s

[CV 3/5] END alpha=0.0001, hidden_layer_sizes=(5, 10); AUC: (test=0.752) total time= 1.5min

[CV 2/5] END alpha=0.0001, hidden_layer_sizes=(10, 5); AUC: (test=0.726) total time= 37.2s

[CV 5/5] END alpha=0.001, hidden_layer_sizes=(1,); AUC: (test=0.699) total time= 0.1s

[CV 2/5] END alpha=0.001, hidden_layer_sizes=(2,); AUC: (test=0.737) total time= 1.1s

[CV 3/5] END alpha=0.001, hidden_layer_sizes=(2,); AUC: (test=0.753) total time= 3.4s

[CV 1/5] END alpha=0.001, hidden_layer_sizes=(3,); AUC: (test=0.755) total time= 10.0s

[CV 4/5] END alpha=0.001, hidden_layer_sizes=(3, 3); AUC: (test=0.777) total time= 33.6s

[CV 5/5] END alpha=0.001, hidden_layer_sizes=(4, 2); AUC: (test=0.772) total time= 15.7s

[CV 4/5] END alpha=0.001, hidden_layer_sizes=(5, 5); AUC: (test=0.779) total time= 21.0s

[CV 1/5] END alpha=0.001, hidden_layer_sizes=(5,); AUC: (test=0.763) total time= 1.5s

[CV 2/5] END alpha=0.001, hidden_layer_sizes=(5,); AUC: (test=0.774) total time= 1.0s

[CV 3/5] END alpha=0.001, hidden_layer_sizes=(5,); AUC: (test=0.774) total time= 5.8s

[CV 3/5] END alpha=0.001, hidden_layer_sizes=(10,); AUC: (test=0.768) total time= 2.5s

[CV 1/5] END alpha=0.001, hidden_layer_sizes=(5, 10); AUC: (test=0.743) total time= 1.2min

[CV 1/5] END alpha=0.001, hidden_layer_sizes=(10, 5); AUC: (test=0.749) total time= 36.9s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(2,); AUC: (test=0.749) total time= 1.3s

[CV 1/5] END alpha=0.01, hidden_layer_sizes=(3,); AUC: (test=0.757) total time= 13.4s

[CV 4/5] END alpha=0.01, hidden_layer_sizes=(3, 3); AUC: (test=0.762) total time= 14.7s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(4, 2); AUC: (test=0.775) total time= 7.1s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(5, 5); AUC: (test=0.776) total time= 22.2s

[CV 1/5] END alpha=0.01, hidden_layer_sizes=(5,); AUC: (test=0.766) total time= 1.3s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(5,); AUC: (test=0.769) total time= 1.4s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(5,); AUC: (test=0.773) total time= 2.2s

[CV 4/5] END alpha=0.01, hidden_layer_sizes=(5,); AUC: (test=0.788) total time= 2.1s

[CV 5/5] END alpha=0.01, hidden_layer_sizes=(5,); AUC: (test=0.771) total time= 2.2s

[CV 1/5] END alpha=0.01, hidden_layer_sizes=(10,); AUC: (test=0.760) total time= 2.1s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(10,); AUC: (test=0.744) total time= 2.0s

[CV 4/5] END alpha=0.01, hidden_layer_sizes=(10,); AUC: (test=0.728) total time= 3.7s

[CV 1/5] END alpha=0.01, hidden_layer_sizes=(5, 10); AUC: (test=0.749) total time= 53.4s

[CV 1/5] END alpha=0.01, hidden_layer_sizes=(10, 5); AUC: (test=0.740) total time= 23.3s

[CV 5/5] END alpha=0.01, hidden_layer_sizes=(10, 5); AUC: (test=0.703) total time= 11.6s

[CV 2/5] END alpha=0.1, hidden_layer_sizes=(3, 3); AUC: (test=0.759) total time= 8.4s

[CV 1/5] END alpha=0.1, hidden_layer_sizes=(4, 2); AUC: (test=0.778) total time= 17.7s

[CV 5/5] END alpha=0.1, hidden_layer_sizes=(4, 2); AUC: (test=0.771) total time= 13.3s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(5, 5); AUC: (test=0.772) total time= 22.4s

[CV 4/5] END alpha=0.1, hidden_layer_sizes=(10,); AUC: (test=0.734) total time= 1.6s

[CV 5/5] END alpha=0.1, hidden_layer_sizes=(10,); AUC: (test=0.750) total time= 2.6s

[CV 4/5] END alpha=0.1, hidden_layer_sizes=(5, 10); AUC: (test=0.786) total time= 33.8s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(10, 5); AUC: (test=0.748) total time= 9.6s

[CV 1/5] END alpha=1, hidden_layer_sizes=(1,); AUC: (test=0.711) total time= 0.1s

[CV 2/5] END alpha=1, hidden_layer_sizes=(1,); AUC: (test=0.719) total time= 0.1s

[CV 3/5] END alpha=1, hidden_layer_sizes=(1,); AUC: (test=0.711) total time= 0.1s

[CV 4/5] END alpha=1, hidden_layer_sizes=(1,); AUC: (test=0.703) total time= 0.1s

[CV 5/5] END alpha=1, hidden_layer_sizes=(1,); AUC: (test=0.699) total time= 0.2s

[CV 1/5] END alpha=1, hidden_layer_sizes=(2,); AUC: (test=0.741) total time= 0.3s

[CV 2/5] END alpha=1, hidden_layer_sizes=(2,); AUC: (test=0.734) total time= 0.2s

[CV 3/5] END alpha=1, hidden_layer_sizes=(2,); AUC: (test=0.738) total time= 0.4s

[CV 4/5] END alpha=1, hidden_layer_sizes=(2,); AUC: (test=0.740) total time= 0.2s

[CV 5/5] END alpha=1, hidden_layer_sizes=(2,); AUC: (test=0.730) total time= 0.4s

[CV 1/5] END alpha=1, hidden_layer_sizes=(3,); AUC: (test=0.744) total time= 0.8s

[CV 5/5] END alpha=1, hidden_layer_sizes=(3,); AUC: (test=0.741) total time= 1.5s

[CV 4/5] END alpha=1, hidden_layer_sizes=(3, 3); AUC: (test=0.770) total time= 2.6s

[CV 1/5] END alpha=1, hidden_layer_sizes=(4, 2); AUC: (test=0.774) total time= 5.2s

[CV 1/5] END alpha=1, hidden_layer_sizes=(5, 5); AUC: (test=0.763) total time= 9.2s

[CV 3/5] END alpha=1, hidden_layer_sizes=(5,); AUC: (test=0.758) total time= 1.0s

[CV 4/5] END alpha=1, hidden_layer_sizes=(5,); AUC: (test=0.767) total time= 0.8s

[CV 1/5] END alpha=1, hidden_layer_sizes=(10,); AUC: (test=0.753) total time= 1.6s

[CV 3/5] END alpha=1, hidden_layer_sizes=(10,); AUC: (test=0.755) total time= 2.6s

[CV 4/5] END alpha=1, hidden_layer_sizes=(5, 10); AUC: (test=0.786) total time= 18.2s

[CV 1/5] END alpha=1, hidden_layer_sizes=(10, 5); AUC: (test=0.753) total time= 6.7s

[CV 5/5] END alpha=1, hidden_layer_sizes=(10, 5); AUC: (test=0.737) total time= 10.5s

[CV 2/5] END alpha=0.0001, hidden_layer_sizes=(1,); AUC: (test=0.829) total time= 0.1s

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(2,); AUC: (test=0.836) total time= 0.3s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(3,); AUC: (test=0.851) total time= 0.4s

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(3, 3); AUC: (test=0.834) total time= 1.4s

[CV 3/5] END alpha=0.0001, hidden_layer_sizes=(4, 2); AUC: (test=0.823) total time= 1.3s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(4, 2); AUC: (test=0.813) total time= 1.7s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(5, 5); AUC: (test=0.795) total time= 3.8s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(5, 5); AUC: (test=0.835) total time= 14.0s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(5, 10); AUC: (test=0.791) total time= 7.3s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(5, 10); AUC: (test=0.852) total time= 34.9s

[CV 4/5] END alpha=0.001, hidden_layer_sizes=(5, 5); AUC: (test=0.792) total time= 15.5s

[CV 4/5] END alpha=0.001, hidden_layer_sizes=(5, 10); AUC: (test=0.777) total time= 10.1s

[CV 3/5] END alpha=0.001, hidden_layer_sizes=(10, 5); AUC: (test=0.798) total time= 9.0s

[CV 5/5] END alpha=0.001, hidden_layer_sizes=(10, 5); AUC: (test=0.821) total time= 6.9s

[CV 1/5] END alpha=0.01, hidden_layer_sizes=(1,); AUC: (test=0.845) total time= 0.1s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(1,); AUC: (test=0.829) total time= 0.1s

[CV 4/5] END alpha=0.01, hidden_layer_sizes=(1,); AUC: (test=0.823) total time= 0.2s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(2,); AUC: (test=0.839) total time= 0.2s

[CV 4/5] END alpha=0.01, hidden_layer_sizes=(2,); AUC: (test=0.826) total time= 0.3s

[CV 1/5] END alpha=0.01, hidden_layer_sizes=(3,); AUC: (test=0.844) total time= 0.4s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(3,); AUC: (test=0.832) total time= 0.2s

[CV 5/5] END alpha=0.01, hidden_layer_sizes=(3,); AUC: (test=0.851) total time= 0.5s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(3, 3); AUC: (test=0.840) total time= 2.0s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(3, 3); AUC: (test=0.843) total time= 2.2s

[CV 5/5] END alpha=0.01, hidden_layer_sizes=(3, 3); AUC: (test=0.852) total time= 5.7s

[CV 4/5] END alpha=0.01, hidden_layer_sizes=(5, 5); AUC: (test=0.797) total time= 4.0s

[CV 5/5] END alpha=0.01, hidden_layer_sizes=(5,); AUC: (test=0.853) total time= 0.3s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(10,); AUC: (test=0.819) total time= 1.2s

[CV 4/5] END alpha=0.01, hidden_layer_sizes=(10,); AUC: (test=0.798) total time= 1.4s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(5, 10); AUC: (test=0.813) total time= 13.6s

[CV 1/5] END alpha=0.01, hidden_layer_sizes=(10, 5); AUC: (test=0.824) total time= 9.6s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(10, 5); AUC: (test=0.790) total time= 5.1s

[CV 1/5] END alpha=0.1, hidden_layer_sizes=(1,); AUC: (test=0.845) total time= 0.1s

[CV 2/5] END alpha=0.1, hidden_layer_sizes=(1,); AUC: (test=0.829) total time= 0.1s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(1,); AUC: (test=0.826) total time= 0.1s

[CV 4/5] END alpha=0.1, hidden_layer_sizes=(1,); AUC: (test=0.823) total time= 0.1s

[CV 5/5] END alpha=0.1, hidden_layer_sizes=(1,); AUC: (test=0.849) total time= 0.1s

[CV 1/5] END alpha=0.1, hidden_layer_sizes=(2,); AUC: (test=0.834) total time= 0.3s

[CV 2/5] END alpha=0.1, hidden_layer_sizes=(2,); AUC: (test=0.839) total time= 0.2s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(2,); AUC: (test=0.835) total time= 0.2s

[CV 4/5] END alpha=0.1, hidden_layer_sizes=(2,); AUC: (test=0.826) total time= 0.4s

[CV 5/5] END alpha=0.1, hidden_layer_sizes=(2,); AUC: (test=0.853) total time= 0.3s

[CV 1/5] END alpha=0.1, hidden_layer_sizes=(3,); AUC: (test=0.845) total time= 0.3s

[CV 2/5] END alpha=0.1, hidden_layer_sizes=(3,); AUC: (test=0.836) total time= 0.3s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(3,); AUC: (test=0.833) total time= 0.2s

[CV 4/5] END alpha=0.1, hidden_layer_sizes=(3,); AUC: (test=0.823) total time= 0.5s

[CV 5/5] END alpha=0.1, hidden_layer_sizes=(3,); AUC: (test=0.851) total time= 0.3s

[CV 1/5] END alpha=0.1, hidden_layer_sizes=(3, 3); AUC: (test=0.840) total time= 1.6s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(3, 3); AUC: (test=0.846) total time= 1.3s

[CV 5/5] END alpha=0.1, hidden_layer_sizes=(3, 3); AUC: (test=0.844) total time= 1.8s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(4, 2); AUC: (test=0.832) total time= 0.4s

[CV 5/5] END alpha=0.1, hidden_layer_sizes=(4, 2); AUC: (test=0.856) total time= 1.3s

[CV 2/5] END alpha=0.1, hidden_layer_sizes=(5, 5); AUC: (test=0.827) total time= 1.9s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(5, 5); AUC: (test=0.842) total time= 2.3s

[CV 1/5] END alpha=0.1, hidden_layer_sizes=(5,); AUC: (test=0.850) total time= 0.5s

[CV 2/5] END alpha=0.1, hidden_layer_sizes=(5,); AUC: (test=0.835) total time= 0.5s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(5,); AUC: (test=0.835) total time= 1.4s

[CV 4/5] END alpha=0.1, hidden_layer_sizes=(5,); AUC: (test=0.827) total time= 0.6s

[CV 5/5] END alpha=0.1, hidden_layer_sizes=(5,); AUC: (test=0.852) total time= 0.5s

[CV 1/5] END alpha=0.1, hidden_layer_sizes=(10,); AUC: (test=0.856) total time= 1.6s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(10,); AUC: (test=0.823) total time= 1.5s

[CV 5/5] END alpha=0.1, hidden_layer_sizes=(10,); AUC: (test=0.864) total time= 2.5s

[CV 2/5] END alpha=0.1, hidden_layer_sizes=(5, 10); AUC: (test=0.840) total time= 21.7s

[CV 3/5] END alpha=1, hidden_layer_sizes=(2,); AUC: (test=0.836) total time= 0.2s

[CV 5/5] END alpha=1, hidden_layer_sizes=(2,); AUC: (test=0.855) total time= 0.3s

[CV 2/5] END alpha=1, hidden_layer_sizes=(3,); AUC: (test=0.835) total time= 0.3s

[CV 3/5] END alpha=1, hidden_layer_sizes=(3,); AUC: (test=0.837) total time= 0.5s

[CV 5/5] END alpha=1, hidden_layer_sizes=(3,); AUC: (test=0.854) total time= 0.8s

[CV 2/5] END alpha=1, hidden_layer_sizes=(3, 3); AUC: (test=0.854) total time= 2.3s

[CV 2/5] END alpha=1, hidden_layer_sizes=(4, 2); AUC: (test=0.846) total time= 2.4s

[CV 4/5] END alpha=1, hidden_layer_sizes=(5, 5); AUC: (test=0.825) total time= 2.3s

[CV 1/5] END alpha=1, hidden_layer_sizes=(5,); AUC: (test=0.853) total time= 0.5s

[CV 3/5] END alpha=1, hidden_layer_sizes=(5,); AUC: (test=0.848) total time= 0.6s

[CV 1/5] END alpha=1, hidden_layer_sizes=(10,); AUC: (test=0.847) total time= 1.2s

[CV 4/5] END alpha=1, hidden_layer_sizes=(10,); AUC: (test=0.825) total time= 1.6s

[CV 1/5] END alpha=1, hidden_layer_sizes=(5, 10); AUC: (test=0.825) total time= 3.9s

[CV 1/5] END alpha=1, hidden_layer_sizes=(10, 5); AUC: (test=0.839) total time= 4.1s

[CV 5/5] END alpha=1, hidden_layer_sizes=(10, 5); AUC: (test=0.818) total time= 2.6s

[CV 3/5] END max_features=None, n_estimators=10; AUC: (test=0.719) total time= 0.5s

[CV 1/5] END max_features=None, n_estimators=50; AUC: (test=0.749) total time= 3.0s

[CV 1/5] END max_features=None, n_estimators=100; AUC: (test=0.757) total time= 6.6s

[CV 5/5] END max_features=None, n_estimators=100; AUC: (test=0.752) total time= 6.5s

[CV 4/5] END max_features=None, n_estimators=200; AUC: (test=0.766) total time= 14.8s

[CV 3/5] END max_features=None, n_estimators=500; AUC: (test=0.765) total time= 31.9s

[CV 2/5] END max_features=None, n_estimators=1000; AUC: (test=0.763) total time= 1.1min

[CV 1/5] END max_features=auto, n_estimators=10; AUC: (test=nan) total time= 0.0s

[CV 2/5] END max_features=auto, n_estimators=10; AUC: (test=nan) total time= 0.0s

[CV 3/5] END max_features=auto, n_estimators=10; AUC: (test=nan) total time= 0.0s

[CV 4/5] END max_features=auto, n_estimators=10; AUC: (test=nan) total time= 0.0s

[CV 5/5] END max_features=auto, n_estimators=10; AUC: (test=nan) total time= 0.0s

[CV 1/5] END max_features=auto, n_estimators=50; AUC: (test=nan) total time= 0.0s

[CV 2/5] END max_features=auto, n_estimators=50; AUC: (test=nan) total time= 0.0s

[CV 3/5] END max_features=auto, n_estimators=50; AUC: (test=nan) total time= 0.0s

[CV 4/5] END max_features=auto, n_estimators=50; AUC: (test=nan) total time= 0.0s

[CV 5/5] END max_features=auto, n_estimators=50; AUC: (test=nan) total time= 0.0s

[CV 1/5] END max_features=auto, n_estimators=100; AUC: (test=nan) total time= 0.0s

[CV 2/5] END max_features=auto, n_estimators=100; AUC: (test=nan) total time= 0.0s

[CV 3/5] END max_features=auto, n_estimators=100; AUC: (test=nan) total time= 0.0s

[CV 4/5] END max_features=auto, n_estimators=100; AUC: (test=nan) total time= 0.0s

[CV 5/5] END max_features=auto, n_estimators=100; AUC: (test=nan) total time= 0.0s

[CV 1/5] END max_features=auto, n_estimators=200; AUC: (test=nan) total time= 0.0s

[CV 2/5] END max_features=auto, n_estimators=200; AUC: (test=nan) total time= 0.0s

[CV 3/5] END max_features=auto, n_estimators=200; AUC: (test=nan) total time= 0.0s

[CV 4/5] END max_features=auto, n_estimators=200; AUC: (test=nan) total time= 0.0s

[CV 5/5] END max_features=auto, n_estimators=200; AUC: (test=nan) total time= 0.0s

[CV 1/5] END max_features=auto, n_estimators=500; AUC: (test=nan) total time= 0.0s

[CV 2/5] END max_features=auto, n_estimators=500; AUC: (test=nan) total time= 0.0s

[CV 3/5] END max_features=auto, n_estimators=500; AUC: (test=nan) total time= 0.0s

[CV 4/5] END max_features=auto, n_estimators=500; AUC: (test=nan) total time= 0.0s

[CV 5/5] END max_features=auto, n_estimators=500; AUC: (test=nan) total time= 0.0s

[CV 1/5] END max_features=auto, n_estimators=1000; AUC: (test=nan) total time= 0.0s

[CV 2/5] END max_features=auto, n_estimators=1000; AUC: (test=nan) total time= 0.0s

[CV 3/5] END max_features=auto, n_estimators=1000; AUC: (test=nan) total time= 0.0s

[CV 4/5] END max_features=auto, n_estimators=1000; AUC: (test=nan) total time= 0.0s

[CV 5/5] END max_features=auto, n_estimators=1000; AUC: (test=nan) total time= 0.0s

[CV 1/5] END max_features=sqrt, n_estimators=10; AUC: (test=0.721) total time= 0.2s

[CV 2/5] END max_features=sqrt, n_estimators=10; AUC: (test=0.714) total time= 0.2s

[CV 3/5] END max_features=sqrt, n_estimators=10; AUC: (test=0.711) total time= 0.2s

[CV 4/5] END max_features=sqrt, n_estimators=10; AUC: (test=0.697) total time= 0.2s

[CV 5/5] END max_features=sqrt, n_estimators=10; AUC: (test=0.703) total time= 0.2s

[CV 1/5] END max_features=sqrt, n_estimators=50; AUC: (test=0.770) total time= 0.9s

[CV 2/5] END max_features=sqrt, n_estimators=50; AUC: (test=0.755) total time= 0.9s

[CV 3/5] END max_features=sqrt, n_estimators=50; AUC: (test=0.772) total time= 0.9s

[CV 4/5] END max_features=sqrt, n_estimators=50; AUC: (test=0.757) total time= 0.9s

[CV 5/5] END max_features=sqrt, n_estimators=50; AUC: (test=0.750) total time= 0.9s

[CV 3/5] END max_features=sqrt, n_estimators=100; AUC: (test=0.780) total time= 1.7s

[CV 4/5] END max_features=sqrt, n_estimators=100; AUC: (test=0.769) total time= 1.7s

[CV 2/5] END max_features=sqrt, n_estimators=200; AUC: (test=0.774) total time= 3.4s

[CV 3/5] END max_features=sqrt, n_estimators=200; AUC: (test=0.782) total time= 3.3s

[CV 1/5] END max_features=sqrt, n_estimators=500; AUC: (test=0.780) total time= 8.5s

[CV 4/5] END max_features=sqrt, n_estimators=500; AUC: (test=0.778) total time= 8.6s

[CV 2/5] END max_features=sqrt, n_estimators=1000; AUC: (test=0.776) total time= 18.1s

[CV 5/5] END max_features=sqrt, n_estimators=1000; AUC: (test=0.766) total time= 17.5s

[CV 1/5] END max_features=log2, n_estimators=500; AUC: (test=0.780) total time= 9.4s

[CV 5/5] END max_features=log2, n_estimators=500; AUC: (test=0.765) total time= 8.9s

[CV 4/5] END max_features=log2, n_estimators=1000; AUC: (test=0.779) total time= 17.3s

[CV 2/5] END max_features=0.25, n_estimators=200; AUC: (test=0.774) total time= 3.8s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(1,); AUC: (test=0.703) total time= 0.2s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(2,); AUC: (test=0.758) total time= 2.7s

[CV 2/5] END alpha=0.0001, hidden_layer_sizes=(3,); AUC: (test=0.731) total time= 0.4s

[CV 3/5] END alpha=0.0001, hidden_layer_sizes=(3,); AUC: (test=0.761) total time= 1.0s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(3,); AUC: (test=0.750) total time= 3.3s

[CV 3/5] END alpha=0.0001, hidden_layer_sizes=(3, 3); AUC: (test=0.760) total time= 17.8s

[CV 3/5] END alpha=0.0001, hidden_layer_sizes=(4, 2); AUC: (test=0.780) total time= 16.6s

[CV 2/5] END alpha=0.0001, hidden_layer_sizes=(5, 5); AUC: (test=0.775) total time= 26.1s

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(5,); AUC: (test=0.761) total time= 2.1s

[CV 2/5] END alpha=0.0001, hidden_layer_sizes=(5,); AUC: (test=0.767) total time= 1.0s

[CV 3/5] END alpha=0.0001, hidden_layer_sizes=(5,); AUC: (test=0.773) total time= 1.6s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(5,); AUC: (test=0.770) total time= 1.6s

[CV 3/5] END alpha=0.0001, hidden_layer_sizes=(10,); AUC: (test=0.761) total time= 3.0s

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(5, 10); AUC: (test=0.764) total time= 37.7s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(5, 10); AUC: (test=0.746) total time= 55.1s

[CV 3/5] END alpha=0.0001, hidden_layer_sizes=(10, 5); AUC: (test=0.738) total time= 26.8s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(10, 5); AUC: (test=0.706) total time= 8.9s

[CV 1/5] END alpha=0.001, hidden_layer_sizes=(1,); AUC: (test=0.711) total time= 0.1s

[CV 2/5] END alpha=0.001, hidden_layer_sizes=(1,); AUC: (test=0.719) total time= 0.1s

[CV 3/5] END alpha=0.001, hidden_layer_sizes=(1,); AUC: (test=0.711) total time= 0.1s

[CV 4/5] END alpha=0.001, hidden_layer_sizes=(1,); AUC: (test=0.703) total time= 0.1s

[CV 1/5] END alpha=0.001, hidden_layer_sizes=(2,); AUC: (test=0.756) total time= 1.8s

[CV 4/5] END alpha=0.001, hidden_layer_sizes=(2,); AUC: (test=0.756) total time= 1.4s

[CV 5/5] END alpha=0.001, hidden_layer_sizes=(2,); AUC: (test=0.753) total time= 1.8s

[CV 2/5] END alpha=0.001, hidden_layer_sizes=(3,); AUC: (test=0.730) total time= 0.5s

[CV 3/5] END alpha=0.001, hidden_layer_sizes=(3,); AUC: (test=0.761) total time= 0.7s

[CV 4/5] END alpha=0.001, hidden_layer_sizes=(3,); AUC: (test=0.718) total time= 1.7s

[CV 5/5] END alpha=0.001, hidden_layer_sizes=(3,); AUC: (test=0.750) total time= 4.3s

[CV 3/5] END alpha=0.001, hidden_layer_sizes=(3, 3); AUC: (test=0.760) total time= 25.1s

[CV 3/5] END alpha=0.001, hidden_layer_sizes=(4, 2); AUC: (test=0.781) total time= 19.3s

[CV 2/5] END alpha=0.001, hidden_layer_sizes=(5, 5); AUC: (test=0.772) total time= 32.8s

[CV 5/5] END alpha=0.001, hidden_layer_sizes=(5,); AUC: (test=0.773) total time= 1.5s

[CV 1/5] END alpha=0.001, hidden_layer_sizes=(10,); AUC: (test=0.758) total time= 3.4s

[CV 4/5] END alpha=0.001, hidden_layer_sizes=(10,); AUC: (test=0.720) total time= 2.8s

[CV 2/5] END alpha=0.001, hidden_layer_sizes=(5, 10); AUC: (test=0.727) total time= 1.3min

[CV 3/5] END alpha=0.001, hidden_layer_sizes=(10, 5); AUC: (test=0.742) total time= 13.2s

[CV 4/5] END alpha=0.001, hidden_layer_sizes=(10, 5); AUC: (test=0.739) total time= 13.2s

[CV 1/5] END alpha=0.01, hidden_layer_sizes=(1,); AUC: (test=0.711) total time= 0.1s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(1,); AUC: (test=0.719) total time= 0.1s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(1,); AUC: (test=0.712) total time= 0.1s

[CV 4/5] END alpha=0.01, hidden_layer_sizes=(1,); AUC: (test=0.703) total time= 0.1s

[CV 5/5] END alpha=0.01, hidden_layer_sizes=(1,); AUC: (test=0.699) total time= 0.1s

[CV 1/5] END alpha=0.01, hidden_layer_sizes=(2,); AUC: (test=0.756) total time= 1.0s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(2,); AUC: (test=0.737) total time= 0.5s

[CV 4/5] END alpha=0.01, hidden_layer_sizes=(2,); AUC: (test=0.760) total time= 1.7s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(3,); AUC: (test=0.731) total time= 0.6s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(3,); AUC: (test=0.758) total time= 0.6s

[CV 4/5] END alpha=0.01, hidden_layer_sizes=(3,); AUC: (test=0.719) total time= 0.8s

[CV 5/5] END alpha=0.01, hidden_layer_sizes=(3,); AUC: (test=0.751) total time= 7.5s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(3, 3); AUC: (test=0.760) total time= 10.9s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(4, 2); AUC: (test=0.775) total time= 7.8s

[CV 4/5] END alpha=0.01, hidden_layer_sizes=(4, 2); AUC: (test=0.787) total time= 20.3s

[CV 4/5] END alpha=0.01, hidden_layer_sizes=(5, 5); AUC: (test=0.781) total time= 32.8s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(5, 10); AUC: (test=0.753) total time= 40.0s

[CV 5/5] END alpha=0.01, hidden_layer_sizes=(5, 10); AUC: (test=0.746) total time= 35.2s

[CV 1/5] END alpha=0.1, hidden_layer_sizes=(3,); AUC: (test=0.755) total time= 2.9s

[CV 4/5] END alpha=0.1, hidden_layer_sizes=(3,); AUC: (test=0.724) total time= 0.4s

[CV 5/5] END alpha=0.1, hidden_layer_sizes=(3,); AUC: (test=0.744) total time= 3.4s

[CV 4/5] END alpha=0.1, hidden_layer_sizes=(3, 3); AUC: (test=0.774) total time= 10.2s

[CV 2/5] END alpha=0.1, hidden_layer_sizes=(4, 2); AUC: (test=0.771) total time= 8.9s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(4, 2); AUC: (test=0.788) total time= 20.2s

[CV 4/5] END alpha=0.1, hidden_layer_sizes=(5, 5); AUC: (test=0.778) total time= 20.7s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(10,); AUC: (test=0.763) total time= 2.6s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(5, 10); AUC: (test=0.756) total time= 22.9s

[CV 1/5] END alpha=0.1, hidden_layer_sizes=(10, 5); AUC: (test=0.748) total time= 20.1s

[CV 5/5] END alpha=0.1, hidden_layer_sizes=(10, 5); AUC: (test=0.716) total time= 4.5s

[CV 3/5] END alpha=1, hidden_layer_sizes=(3,); AUC: (test=0.739) total time= 0.6s

[CV 1/5] END alpha=1, hidden_layer_sizes=(3, 3); AUC: (test=0.773) total time= 2.9s

[CV 5/5] END alpha=1, hidden_layer_sizes=(3, 3); AUC: (test=0.755) total time= 4.4s

[CV 4/5] END alpha=1, hidden_layer_sizes=(4, 2); AUC: (test=0.775) total time= 3.5s

[CV 3/5] END alpha=1, hidden_layer_sizes=(5, 5); AUC: (test=0.773) total time= 11.0s

[CV 4/5] END alpha=1, hidden_layer_sizes=(10,); AUC: (test=0.749) total time= 2.2s

[CV 3/5] END alpha=1, hidden_layer_sizes=(5, 10); AUC: (test=0.768) total time= 18.0s

[CV 5/5] END alpha=1, hidden_layer_sizes=(5, 10); AUC: (test=0.762) total time= 14.5s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(1,); AUC: (test=0.823) total time= 0.1s

[CV 2/5] END alpha=0.0001, hidden_layer_sizes=(2,); AUC: (test=0.839) total time= 0.2s

[CV 3/5] END alpha=0.0001, hidden_layer_sizes=(3,); AUC: (test=0.832) total time= 0.2s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(3,); AUC: (test=0.824) total time= 0.2s

[CV 2/5] END alpha=0.0001, hidden_layer_sizes=(3, 3); AUC: (test=0.839) total time= 2.3s

[CV 3/5] END alpha=0.0001, hidden_layer_sizes=(3, 3); AUC: (test=0.847) total time= 0.7s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(4, 2); AUC: (test=0.872) total time= 4.0s

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(5, 5); AUC: (test=0.812) total time= 4.6s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(5,); AUC: (test=0.845) total time= 0.5s

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(10,); AUC: (test=0.861) total time= 1.4s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(10,); AUC: (test=0.794) total time= 1.7s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(10,); AUC: (test=0.871) total time= 2.1s

[CV 3/5] END alpha=0.0001, hidden_layer_sizes=(5, 10); AUC: (test=0.802) total time= 12.5s

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(10, 5); AUC: (test=0.797) total time= 15.1s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(10, 5); AUC: (test=0.771) total time= 13.1s

[CV 2/5] END alpha=0.001, hidden_layer_sizes=(4, 2); AUC: (test=0.817) total time= 0.9s

[CV 4/5] END alpha=0.001, hidden_layer_sizes=(4, 2); AUC: (test=0.806) total time= 2.9s

[CV 2/5] END alpha=0.001, hidden_layer_sizes=(5, 5); AUC: (test=0.815) total time= 7.2s

[CV 5/5] END alpha=0.001, hidden_layer_sizes=(5,); AUC: (test=0.848) total time= 0.5s

[CV 2/5] END alpha=0.001, hidden_layer_sizes=(10,); AUC: (test=0.815) total time= 1.6s

[CV 5/5] END alpha=0.001, hidden_layer_sizes=(10,); AUC: (test=0.867) total time= 2.2s

[CV 3/5] END alpha=0.001, hidden_layer_sizes=(5, 10); AUC: (test=0.807) total time= 12.5s

[CV 1/5] END alpha=0.001, hidden_layer_sizes=(10, 5); AUC: (test=0.797) total time= 7.7s

[CV 4/5] END alpha=0.001, hidden_layer_sizes=(10, 5); AUC: (test=0.792) total time= 24.4s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(5, 5); AUC: (test=0.817) total time= 3.0s

[CV 1/5] END alpha=0.01, hidden_layer_sizes=(5,); AUC: (test=0.847) total time= 0.4s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(5,); AUC: (test=0.833) total time= 0.5s

[CV 4/5] END alpha=0.01, hidden_layer_sizes=(5,); AUC: (test=0.817) total time= 0.6s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(10,); AUC: (test=0.801) total time= 1.7s

[CV 1/5] END alpha=0.01, hidden_layer_sizes=(5, 10); AUC: (test=0.795) total time= 11.8s

[CV 5/5] END alpha=0.01, hidden_layer_sizes=(5, 10); AUC: (test=0.847) total time= 44.3s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(5, 10); AUC: (test=0.801) total time= 4.0s

[CV 5/5] END alpha=0.1, hidden_layer_sizes=(5, 10); AUC: (test=0.838) total time= 8.6s

[CV 3/5] END alpha=0.1, hidden_layer_sizes=(10, 5); AUC: (test=0.800) total time= 4.2s

[CV 5/5] END alpha=0.1, hidden_layer_sizes=(10, 5); AUC: (test=0.804) total time= 4.4s

[CV 3/5] END alpha=1, hidden_layer_sizes=(3, 3); AUC: (test=0.849) total time= 1.5s

[CV 1/5] END alpha=1, hidden_layer_sizes=(4, 2); AUC: (test=0.833) total time= 1.6s

[CV 5/5] END alpha=1, hidden_layer_sizes=(4, 2); AUC: (test=0.849) total time= 0.8s

[CV 3/5] END alpha=1, hidden_layer_sizes=(5, 5); AUC: (test=0.846) total time= 3.1s

[CV 5/5] END alpha=1, hidden_layer_sizes=(5,); AUC: (test=0.852) total time= 1.0s

[CV 3/5] END alpha=1, hidden_layer_sizes=(10,); AUC: (test=0.833) total time= 2.8s

[CV 3/5] END alpha=1, hidden_layer_sizes=(5, 10); AUC: (test=0.835) total time= 4.2s

[CV 2/5] END alpha=1, hidden_layer_sizes=(10, 5); AUC: (test=0.813) total time= 2.6s

[CV 4/5] END alpha=1, hidden_layer_sizes=(10, 5); AUC: (test=0.777) total time= 5.0s

[CV 4/5] END max_features=None, n_estimators=10; AUC: (test=0.712) total time= 0.5s

[CV 3/5] END max_features=None, n_estimators=50; AUC: (test=0.751) total time= 3.0s

[CV 5/5] END max_features=None, n_estimators=50; AUC: (test=0.741) total time= 3.1s

[CV 4/5] END max_features=None, n_estimators=100; AUC: (test=0.765) total time= 7.2s

[CV 3/5] END max_features=None, n_estimators=200; AUC: (test=0.760) total time= 14.0s

[CV 2/5] END max_features=None, n_estimators=500; AUC: (test=0.761) total time= 32.1s

[CV 1/5] END max_features=None, n_estimators=1000; AUC: (test=0.763) total time= 1.1min

[CV 5/5] END max_features=None, n_estimators=1000; AUC: (test=0.751) total time= 60.0s

[CV 2/5] END max_features=log2, n_estimators=50; AUC: (test=0.755) total time= 0.9s

[CV 4/5] END max_features=log2, n_estimators=50; AUC: (test=0.757) total time= 0.9s

[CV 2/5] END max_features=log2, n_estimators=100; AUC: (test=0.766) total time= 1.7s

[CV 4/5] END max_features=log2, n_estimators=100; AUC: (test=0.769) total time= 1.8s

[CV 1/5] END max_features=log2, n_estimators=200; AUC: (test=0.779) total time= 3.8s

[CV 3/5] END max_features=log2, n_estimators=200; AUC: (test=0.782) total time= 3.7s

[CV 2/5] END max_features=log2, n_estimators=500; AUC: (test=0.776) total time= 9.1s

[CV 1/5] END max_features=log2, n_estimators=1000; AUC: (test=0.780) total time= 17.0s

[CV 5/5] END max_features=log2, n_estimators=1000; AUC: (test=0.766) total time= 17.0s

[CV 2/5] END alpha=0.0001, hidden_layer_sizes=(1,); AUC: (test=0.719) total time= 0.2s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(1,); AUC: (test=0.699) total time= 0.1s

[CV 3/5] END alpha=0.0001, hidden_layer_sizes=(2,); AUC: (test=0.753) total time= 3.2s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(3,); AUC: (test=0.718) total time= 1.7s

[CV 2/5] END alpha=0.0001, hidden_layer_sizes=(3, 3); AUC: (test=0.759) total time= 8.8s

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(4, 2); AUC: (test=0.775) total time= 18.4s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(4, 2); AUC: (test=0.772) total time= 14.6s

[CV 3/5] END alpha=0.0001, hidden_layer_sizes=(5, 5); AUC: (test=0.769) total time= 27.4s

[CV 2/5] END alpha=0.0001, hidden_layer_sizes=(10,); AUC: (test=0.754) total time= 2.6s

[CV 5/5] END alpha=0.0001, hidden_layer_sizes=(10,); AUC: (test=0.756) total time= 3.3s

[CV 4/5] END alpha=0.0001, hidden_layer_sizes=(5, 10); AUC: (test=0.778) total time= 45.9s

[CV 1/5] END alpha=0.0001, hidden_layer_sizes=(10, 5); AUC: (test=0.739) total time= 1.5min

[CV 2/5] END alpha=0.001, hidden_layer_sizes=(3, 3); AUC: (test=0.759) total time= 5.6s

[CV 1/5] END alpha=0.001, hidden_layer_sizes=(4, 2); AUC: (test=0.776) total time= 26.5s

[CV 4/5] END alpha=0.001, hidden_layer_sizes=(4, 2); AUC: (test=0.787) total time= 17.4s

[CV 3/5] END alpha=0.001, hidden_layer_sizes=(5, 5); AUC: (test=0.761) total time= 44.8s

[CV 4/5] END alpha=0.001, hidden_layer_sizes=(5, 10); AUC: (test=0.780) total time= 1.8min

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(3, 3); AUC: (test=0.762) total time= 5.6s

[CV 5/5] END alpha=0.01, hidden_layer_sizes=(3, 3); AUC: (test=0.758) total time= 18.7s

[CV 1/5] END alpha=0.01, hidden_layer_sizes=(5, 5); AUC: (test=0.766) total time= 24.2s

[CV 5/5] END alpha=0.01, hidden_layer_sizes=(5, 5); AUC: (test=0.774) total time= 13.5s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(10,); AUC: (test=0.763) total time= 3.9s

[CV 5/5] END alpha=0.01, hidden_layer_sizes=(10,); AUC: (test=0.748) total time= 2.6s

[CV 2/5] END alpha=0.01, hidden_layer_sizes=(5, 10); AUC: (test=0.739) total time= 59.8s

[CV 3/5] END alpha=0.01, hidden_layer_sizes=(10, 5); AUC: (test=0.731) total time= 13.9s